CAMERA LENS CORRECTIONS

Lens corrections help offset imperfections present in nearly every camera image. These might include darkening near the corners of the frame, otherwise straight lines appearing curved, or color fringes near edge detail. Even though these often aren't obvious in the original photo, the benefits of their removal almost always are. However, lens corrections also have the potential to make images worse if not carefully performed, and depending on the subject, some imperfections can actually be beneficial.

Figure: Before | After

Results after vignetting, distortion and chromatic aberration correction.

Differences would be even more apparent if viewed at 100% on-screen.

OVERVIEW

The three most common lens corrections aim to address one of the following:

- Vignetting. This appears as a progressive darkening toward the edges of the image.

- Distortion. This appears as otherwise straight lines bending inwards or outwards.

- Chromatic Aberration. This appears as color fringing along high contrast edges.

However, lens correction software is typically only able to fix certain types of each imperfection above, so being able to identify them is key. The following sections describe the types and causes of each imperfection, when correction is possible, and how to minimize imperfections in the first place.

Most lens correction software will suffice for this tutorial, but common options include Adobe Camera RAW, Lightroom, Aperture, DxO Optics and PTLens, amongst others.

1. VIGNETTING

This describes the gradual fall-off of light towards the corners of your image, and is perhaps the most straightforward lens imperfection to observe and correct.

Figure panels: Internal Vignetting | Physical Vignetting | Vignetting Removed

Note how internal vignetting is most objectionable at the top left and bottom right due to the subject matter, even though the effect applies uniformly to all four corners.

Types & Causes. Vignetting can be grouped into two general categories:

- Physical Vignetting. Often not correctable except by cropping or manual brightening/cloning. Appears as a strong, abrupt darkening usually only in the very corners of an image. Caused by stacked/large filters, lens hoods or other objects physically blocking light near an image's edges.

- Internal* Vignetting. Usually easily correctable. Appears as a gradual and often subtle darkening away from the image's center. Caused by the inner workings of your particular lens and camera. It's typically most apparent at lower f-numbers (wider apertures), with zoom and wide angle lenses, and when focusing on distant objects. Digital SLR cameras with cropped sensors are also less susceptible to vignetting because the darker edges get cropped out (when using full-frame lenses).

*Technical Note: Internal vignetting comprises two sub-categories: optical and natural vignetting. The first can be minimized by stopping down your lens (using a higher f-number), but the second is independent of lens setting. Natural vignetting is therefore unavoidable, unless one is able to use a lens with a narrower angle of view, or a specially designed corrective filter that discards light toward the image's center (uncommon except perhaps with large format cameras).
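Natural vignetting is often approximated by the idealized "cos^4 law": light reaching a point imaged at an angle θ off the lens axis falls to roughly cos^4(θ) of its value at the center. The sketch below applies that law (real lenses, especially retrofocus wide angles, can deviate from it considerably) to show why a narrower angle of view, or a cropped sensor that only uses the central part of the image circle, shows less fall-off. The 24 mm focal length and the assumed 1.5x crop factor are illustrative choices.

```python
import math


def natural_vignetting_stops(focal_length_mm, image_height_mm):
    """Light fall-off, in stops, at a point image_height_mm from the frame
    center, using the idealized cos^4 law for a simple lens."""
    theta = math.atan(image_height_mm / focal_length_mm)  # off-axis angle
    relative_illuminance = math.cos(theta) ** 4
    return -math.log2(relative_illuminance)


# Half-diagonal (center-to-corner distance) of a 36 x 24 mm full-frame sensor,
# and of a hypothetical 1.5x crop sensor using the same lens.
FULL_FRAME_HALF_DIAGONAL_MM = math.hypot(36, 24) / 2
CROP_HALF_DIAGONAL_MM = FULL_FRAME_HALF_DIAGONAL_MM / 1.5

for label, corner in [("full frame", FULL_FRAME_HALF_DIAGONAL_MM),
                      ("1.5x crop", CROP_HALF_DIAGONAL_MM)]:
    stops = natural_vignetting_stops(24, corner)
    print(f"24 mm lens, {label} corner: {stops:.2f} stops darker")
```

Under these assumptions the full-frame corner comes out roughly 1.7 stops darker than the center, versus roughly 0.9 stops for the crop-sensor corner; increasing the focal length (a narrower angle of view) shrinks the fall-off in the same way.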

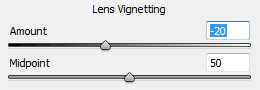

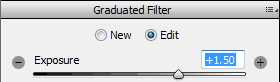

Vignetting correction sliders in Photoshop

Correction. Vignetting can often be fixed using a single "amount" slider, although you can sometimes also adjust the center of the vignetting correction using a "midpoint" slider (but this is rarely needed). However, correction will increase image noise toward the corners, because digitally brightening an image amplifies both the signal and the noise equally.
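A minimal sketch of what an "amount" slider does, assuming a hypothetical quadratic fall-off model (real software uses calibrated lens profiles or more elaborate curves): each pixel is multiplied by a gain that grows with distance from the center. The second half illustrates why the corners get noisier, assuming pure photon (shot) noise whose standard deviation is the square root of the signal.

```python
import math


def vignetting_gain(x, y, width, height, amount):
    """Brightening factor for pixel (x, y).

    Hypothetical model: gain = 1 + amount * r**2, where r is 0 at the image
    center and 1 in the corners. `amount` stands in for an "amount" slider.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    r = math.hypot(x - cx, y - cy) / math.hypot(cx, cy)
    return 1.0 + amount * r * r


def correct_vignetting(pixels, amount):
    """Multiply every pixel of a grayscale image (a list of rows) by its gain."""
    height, width = len(pixels), len(pixels[0])
    return [[value * vignetting_gain(x, y, width, height, amount)
             for x, value in enumerate(row)]
            for y, row in enumerate(pixels)]


# Why corners get noisier: with shot noise only, noise = sqrt(signal) in
# arbitrary units. A corner that recorded half the light of the center needs
# a 2x gain, and that gain multiplies its noise as well as its signal.
center_signal, corner_signal = 100.0, 50.0
gain = center_signal / corner_signal
print("center noise:", math.sqrt(center_signal))                   # 10.0
print("corrected corner noise:", gain * math.sqrt(corner_signal))  # about 14.1
```

After correction both regions have the same brightness, but the corner carries about 41% more noise because it started from fewer photons.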

Artificial Vignetting. Some photographers actually add vignetting to their images in order to draw attention to a central subject, and to make the frame's edges appear less abrupt. If you do, apply the vignetting after any image cropping (sometimes called "post crop vignetting"), so that the darkening stays centered on the final frame.

2. DISTORTION: BARREL, PINCUSHION & PERSPECTIVE

This can give otherwise straight lines the appearance of bending inward or outward, and can influence the depiction of depth.

Types & Causes. The most common categories of image distortion include:

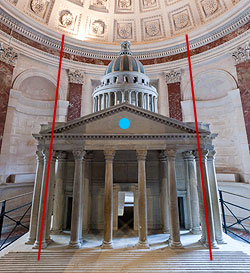

Perspective "Distortion": blue dot represents direction of camera; red lines mark converging parallel lines.

- Pincushion Distortion. Appears when otherwise straight lines curve inward. Typically caused by telephoto lenses, or at the telephoto end of a zoom lens.

- Barrel Distortion. Appears when otherwise straight lines curve outward. Typically caused by wide angle lenses, or at the wide end of a zoom lens.

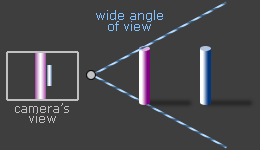

- Perspective Distortion*. Appears when otherwise parallel lines converge. Caused by the camera not facing these parallel lines perpendicularly; with trees and architecture, this usually means that the camera isn't pointed at the horizon.

With landscapes, distortion of the horizon and trees is usually most detectable. Placing the horizon along the center of a photo can help minimize the appearance of all three types of distortion.
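Barrel and pincushion distortion are commonly modeled as a radial scaling that depends on distance from the image center. The sketch below uses only the first term of the widely used Brown-Conrady radial model; the k1 values are illustrative assumptions, not measured lens data. It also shows why a centered horizon helps: points on a line through the center only move along that line, so the line stays straight.

```python
def radial_distort(x, y, k1):
    """Move an undistorted point (x, y) to where the lens would record it.

    Coordinates are normalized so the image center is (0, 0) and the frame
    edges are near +/-1. Each point is scaled toward or away from the center
    by (1 + k1 * r**2): k1 < 0 gives barrel, k1 > 0 gives pincushion.
    """
    scale = 1.0 + k1 * (x * x + y * y)
    return x * scale, y * scale


def horizontal_line_bow(y, k1):
    """How much a horizontal line at height y bends: its distorted height at
    the frame edge (x = 1) minus its distorted height at the center (x = 0).
    For y > 0, a negative value means the ends are pulled toward the center."""
    _, y_center = radial_distort(0.0, y, k1)
    _, y_edge = radial_distort(1.0, y, k1)
    return y_edge - y_center


for k1, label in [(-0.1, "barrel"), (0.1, "pincushion")]:
    print(label,
          "| horizon at center:", horizontal_line_bow(0.0, k1),
          "| horizon near top:", round(horizontal_line_bow(0.5, k1), 3))
```

A horizontal line at y = 0 gives zero bow for any k1, whereas the same line placed near the top of the frame bows outward with barrel distortion and inward with pincushion distortion.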

*Technical Note: Perspective distortion isn't technically a true distortion because it is a natural characteristic of 3D vision. We see it with our own eyes, but our mind knows the correct 3D positioning of objects and therefore doesn't perceive these lines as converging. For more, also see the tutorials on wide angle lenses and using tilt/shift lenses to control perspective.

Correction. Fortunately, each of the above types of distortion is correctable. However, this should only be performed when necessary, such as with subjects that include straight lines or are highly geometric. Architectural photography is often most sensitive, for example, whereas many landscapes aren't noticeably affected.

Distortion correction sliders in Photoshop

Editing software usually provides sliders for barrel/pincushion and perspective distortion in the vertical and horizontal directions. Use the grid overlay feature (if available) so that you can more easily assess whether editing has made lines straight and parallel.
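Perspective correction can be expressed as a projective transform (homography), which keeps straight lines straight while allowing converging lines to be made parallel. The sketch below solves for the transform that maps the four corners of a building's converging outline onto a rectangle. The coordinates are hypothetical, and a real editor would also resample the pixels and crop the result; only the coordinate mapping is shown here.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination
    with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def perspective_transform(src, dst):
    """3x3 projective transform (h[2][2] fixed to 1) mapping four source
    points onto four destination points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def map_point(h, x, y):
    """Apply the transform to (x, y), dividing by the projective weight."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical building whose vertical edges converge toward the top of the
# frame (normalized coordinates, y increasing downward).
building = [(0.2, 0.0), (0.8, 0.0), (1.0, 1.0), (0.0, 1.0)]
rectangle = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
h = perspective_transform(building, rectangle)
```

Any point along the building's left edge, such as `map_point(h, 0.1, 0.5)`, lands on the line x = 0, meaning the converging vertical has become straight and parallel to the frame edge.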

Disadvantages. Distortion correction usually requires cropping out the curved edges of the corrected frame, which can influence composition. Correction also redistributes an image's resolution: with pincushion removal, the edges will appear slightly sharper (at the expense of the center), whereas with barrel removal the center will instead appear slightly sharper (at the expense of the edges). With wide angle lenses, for example, leaving barrel distortion uncorrected is actually a helpful way of offsetting the corner softening that is common with these lenses.

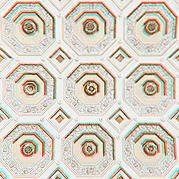

3. CHROMATIC ABERRATIONS

Chromatic aberration (CA) appears as unsightly color fringes near high contrast edges. Unlike the other two lens imperfections, chromatic aberrations are typically only visible when viewing the image on-screen at full size, or in large prints.

The correction above is effective because the CA consisted primarily of the easily removable lateral type.

Types & Causes. Chromatic aberration is perhaps the most varied and complicated lens imperfection, and its prevalence is highly dependent on the subject matter. Fortunately, CA can be more easily understood by separating it out into at least three phenomena:

Technical Notes: Pure lateral CA happens when the color constituents of an image are captured at different relative sizes (but are all in sharp focus). With pure axial CA, the color constituents are the same relative size, but some are out of focus. With blooming, both of these may happen, but at the small scale of the sensor's microlenses instead of image-wide at the camera lens.

- Lateral Chromatic Aberrations. The most easily correctable. Appears as opposing dual-color fringing along a radial direction from the image's center, and increasingly so near the corners of an image. Colors are often cyan/magenta, along with potentially a blue/yellow component.

- Axial Chromatic Aberrations. Uncorrectable, or only partially correctable with adverse effects elsewhere. Appears as a single-colored halo around all sides of high contrast detail, and varies less with image position. The halo is often purplish, but its color and size can sometimes be improved by slightly front- or back-focusing the lens.

- Sensor Blooming. Usually uncorrectable. A phenomenon unique to digital sensors, in which clipped highlights spill over into neighboring pixels, producing highly varied sensor-level color fringing that usually appears blue or purplish. It's most prevalent with sharp, clipped specular highlights on high resolution compact cameras. The classic example is the edges of tree tops and foliage against a bright white sky.

All images contain some combination of the above types, although their relative prevalence can vary dramatically depending on image content and camera lens. Both lateral and axial CA are more apparent with inexpensive lenses, whereas blooming is more apparent with older compact cameras; all are more visible at higher resolutions.

Note: Although axial CA and blooming typically occur uniformly around all edges, they may not appear equally in all directions, depending on the color and brightness of that particular edge. This can often cause these to be mistaken for lateral CA. Lateral and axial CA are sometimes also called transverse and longitudinal CA, respectively.

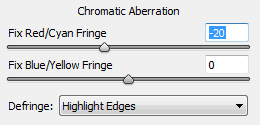

Chromatic aberration sliders in Photoshop

Correction. Reducing chromatic aberrations can make an enormous difference in sharpness and image quality — especially near the edges of your frame. However, only some components of CA can be mostly removed. The trick is to identify and apply the appropriate tools for each component separately — without making the others worse. For example, reducing axial CA in one region (by mistakenly using lateral CA tools) will usually make its appearance worse in other regions.

Start by using a high contrast edge near the image's corner, and view at 100-400% on-screen to gauge correction effectiveness. It is often best to start with lateral CA, using the red/cyan then blue/yellow adjustment sliders, since this is most easily removed. Whatever remains is then the combined result of axial CA and sensor blooming, which can be reduced using the "Defringe" tool in Photoshop. Regardless of which settings you start off with, though, the key is to experiment.

Figure panels: Original at 400% | After Lateral CA Removed | After Blooming & Axial CA Reduced

Crop is from the upper left of the water sunset image above.

Don't expect miracles though; some blooming and axial CA will almost always remain. This is especially true with light sources at night, stars and direct reflections off of metal or water.
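The lateral CA sliders can be thought of as radially rescaling one color channel relative to green, since pure lateral CA means the channels were captured at slightly different sizes. The sketch below illustrates that idea for a single row of pixels passing through the image center, using linear interpolation. It assumes a known, uniform scale factor and works in 1D; it is not how any particular raw converter implements its sliders.

```python
def rescale_channel_row(channel, scale):
    """Undo lateral CA along one row of pixels through the image center.

    Assumes this channel was recorded `scale` times larger than the reference
    (green) channel, so a feature that belongs at distance r from the center
    landed at distance scale * r. Each output pixel therefore samples the input
    at scale * r with linear interpolation; positions that fall outside the
    row are clamped to its ends.
    """
    n = len(channel)
    center = (n - 1) / 2
    corrected = []
    for i in range(n):
        src = center + (i - center) * scale
        src = min(max(src, 0.0), n - 1.0)
        lo = int(src)              # src >= 0, so int() rounds down
        hi = min(lo + 1, n - 1)
        t = src - lo
        corrected.append(channel[lo] * (1 - t) + channel[hi] * t)
    return corrected
```

For example, `rescale_channel_row(red_row, 1.002)` would bring a red channel recorded 0.2% too large back to the green channel's size; `red_row` and the factor are placeholders. A red/cyan slider would adjust such a factor for red, and a blue/yellow slider the equivalent factor for blue.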

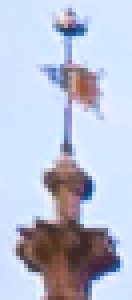

AUTOMATED LENS CORRECTION PROFILES

Modern RAW development software often has the ability to perform lens correction using pre-calibrated parameters for a broad range of camera and lens combinations. This can be an enormous timesaver if available. Adobe Camera RAW (ACR), Lightroom, Aperture, DxO Optics and PTLens all have this capability in their most recent versions.

Don't be afraid to change these from their default of 100% (full correction). Some might prefer to retain a little vignetting and distortion, but to fully correct for chromatic aberrations, for example. With CA though, best results are ordinarily achieved by following this up with manual fine-tuning.

If you're using lens correction as part of your photo editing workflow, the order in which it's performed can impact results. Noise reduction is usually more effective prior to CA removal, but sharpening should be performed afterwards, since sharpening first can harm CA removal. If you're using RAW development software though, don't worry about the order, since the software applies these steps intelligently regardless of the order in which you adjust them.

FURTHER READING

For similar topics, also visit the following tutorials:

- Digital Photo Editing Workflow. A good way to see how lens corrections fit in amongst all the other editing steps.

- Camera Lens Quality: MTF, Resolution & Contrast. An overview of all other lens aspects contributing to lower image quality.

- Understanding Camera Lenses. Includes an interactive lens quality visualization tool at the start.

MOON & NIGHT PHOTO CALCULATOR

Planning is more important than ever with moon, night and astrophotography, in part because the desired conditions may only arise a few times a year. The following calculator can be used for guidance:

Calculator in part based on formulae in the book Astronomical Algorithms by Jean Meeus.

Not intended for use within the Arctic or Antarctic circles.

Sky darkness results are for tonight through to tomorrow morning.

INSTRUCTIONS

Your current location and time zone have likely already been detected. If you want to try somewhere else, you can either (i) drag and drop the marker or (ii) enter a landmark name and/or address in the search box and click "Find Location." The results will then update automatically using your current time settings.

Example usage scenarios:

Astrophotography. The key here is knowing when the sky is as dark as possible. Use the calculator to ensure that (i) the sun is beyond astronomical twilight (more than 18° below the horizon) and (ii) the moon either hasn't risen yet, has already set, or is near a new moon.

Moonlit Nightscapes. The key here is knowing when you'll have the desired ground lighting. Use the calculator to ensure that (i) the time is an hour after moonrise or an hour before moonset (often ideal), (ii) this coincides with nighttime, and (iii) the moon is near a full moon to give shorter exposure times.

Lunar Photography. The key here is knowing when the moon itself appears as desired. Use the calculator to ensure that (i) the moon has risen during nighttime and (ii) the moon is at the phase you want to photograph (such as a crescent if you're after a moody cloud-shrouded shot, or gibbous to bring out its surface detail).

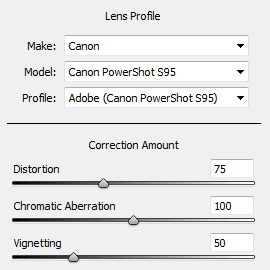

PHASES OF THE MOON

Typical lunar nomenclature over its 29.5-day cycle:

Despite all of the names, there are really just two concepts to remember:

- Size. The terms gibbous and crescent specify whether the moon is greater than or less than half illuminated, respectively. The special cases of new moon and full moon refer to no illumination and full illumination.

- Phase Direction. The terms waxing and waning specify whether the illuminated portion of the moon is growing or shrinking, respectively.
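The two concepts above (size and direction) are enough to name any phase. The sketch below estimates the illuminated fraction from the moon's age in days since new moon, assuming the moon's angle from the sun grows uniformly over an average 29.53-day cycle (it ignores the moon's elliptical orbit, so it is only approximate). The 2% tolerance used for "new," "full" and "quarter" is an arbitrary choice.

```python
import math

SYNODIC_MONTH_DAYS = 29.53  # average time from one new moon to the next


def illuminated_fraction(age_days):
    """Approximate fraction of the disc that is lit, given days since new moon."""
    angle = 2 * math.pi * age_days / SYNODIC_MONTH_DAYS
    return (1 - math.cos(angle)) / 2


def phase_name(age_days, tolerance=0.02):
    """Combine size (fraction lit) with direction (waxing or waning)."""
    fraction = illuminated_fraction(age_days)
    waxing = (age_days % SYNODIC_MONTH_DAYS) < SYNODIC_MONTH_DAYS / 2
    if fraction < tolerance:
        return "new moon"
    if fraction > 1 - tolerance:
        return "full moon"
    if abs(fraction - 0.5) < tolerance:
        return "first quarter" if waxing else "last quarter"
    size = "gibbous" if fraction > 0.5 else "crescent"
    direction = "waxing" if waxing else "waning"
    return f"{direction} {size}"


for age in (0, 3, 7.4, 14.8, 20, 26):
    print(age, phase_name(age))
```
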

NOTES

Midnight. This describes when the sun is farthest below the horizon, and corresponds with when the sky is darkest. Whenever there is no sunrise or sunset, such as near the poles in summer and winter, this describes the time of day when the sky is least bright.

Astronomical Twilight. This describes when the sky becomes completely black and is suitable for astronomical observations of faint stars. It corresponds with when the sun dips to 18° below the horizon. However, at high latitudes during summer, the sun may never actually dip this far below the horizon.
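The elevation thresholds in these notes can be expressed as a small classifier of sky brightness. This is a sketch under stated assumptions: the -0.833° sunrise threshold (the sun's center when its upper edge meets the horizon, including typical refraction) is a common convention not stated in this tutorial, and computing the sun's elevation itself is left out.

```python
SUNRISE_ELEVATION = -0.833   # assumed convention: upper edge of sun on the horizon
CIVIL_TWILIGHT_LIMIT = -6.0  # dawn / dusk
ASTRONOMICAL_LIMIT = -18.0   # end of astronomical twilight


def sky_state(sun_elevation_deg):
    """Classify sky brightness from the sun's elevation in degrees
    (negative values are below the horizon)."""
    if sun_elevation_deg > SUNRISE_ELEVATION:
        return "daylight"
    if sun_elevation_deg > CIVIL_TWILIGHT_LIMIT:
        return "civil twilight"
    if sun_elevation_deg > ASTRONOMICAL_LIMIT:
        return "nautical or astronomical twilight"
    return "dark sky"
```

For astrophotography, the goal is for the sun's elevation to remain in the "dark sky" band for the whole session.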

Moonrise & Moonset. These represent when the upper edge of the moon's disc crosses the horizon, regardless of whether that portion of the disc is currently illuminated. Just as with sunrise and sunset, side lighting from the moon will cast long shadows and often appear most striking, so the hour after moonrise and before moonset may give optimal light for nightscapes. Unlike sunrise and sunset though, moonrise and moonset times are on average about 50 minutes later each day.
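The roughly 50-minute figure follows from simple arithmetic. The moon travels once around the sky relative to the sun each synodic month (about 29.53 days), in the same direction the Earth rotates, so over that period it rises one fewer time than the sun does. A "lunar day" is therefore longer than a solar day by the factor synodic / (synodic - 1):

```python
SOLAR_DAY_MINUTES = 24 * 60
SYNODIC_MONTH_DAYS = 29.53  # average time from one new moon to the next


def average_moonrise_delay_minutes(synodic_month_days=SYNODIC_MONTH_DAYS):
    """Average amount by which moonrise (or moonset) shifts later each day."""
    lunar_day_minutes = SOLAR_DAY_MINUTES * synodic_month_days / (synodic_month_days - 1)
    return lunar_day_minutes - SOLAR_DAY_MINUTES


print(round(average_moonrise_delay_minutes(), 1))  # 50.5
```

This is only the average; the actual day-to-day delay varies considerably with season and latitude.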

FURTHER READING

For similar topics, also visit the following tutorials:

- Sunset, Sunrise & Twilight Calculator. This one is more useful for daylight photography.

- Intro & Common Obstacles in Night Photography. Gives an overview of the unique challenges for photography at night.

- Making the Most of Natural Light in Photography. Discusses how different times of day affect subject appearance.

SUNRISE & SUNSET PHOTO CALCULATOR

Planning when to be on location for the right light can make a tremendous difference in your photography. The following daylight calculator can be used for guidance:

Calculator in part based on formulae in the book Astronomical Algorithms by Jean Meeus.

Results assume an unobstructed view of a planar horizon.

INSTRUCTIONS

Your current location and time zone have likely already been detected. If you want to try somewhere else, you can either (i) drag and drop the marker or (ii) enter a landmark name and/or address in the search box and click "Find Location." The results will then update automatically using your current time settings.

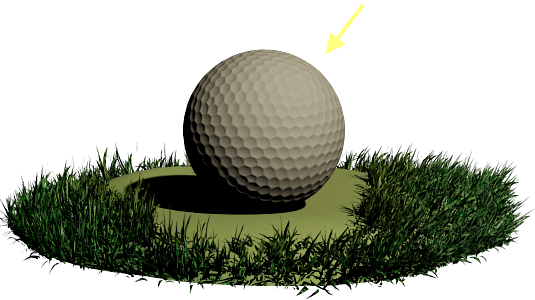

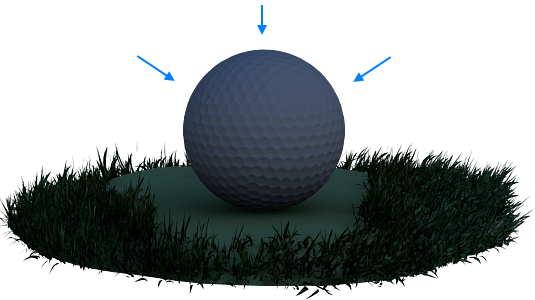

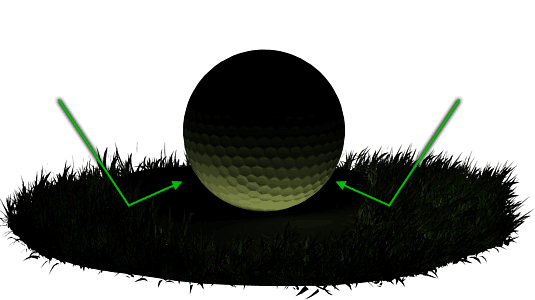

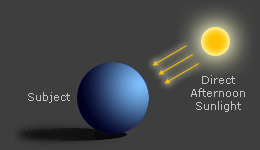

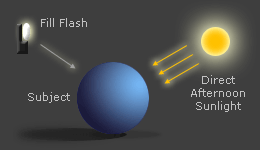

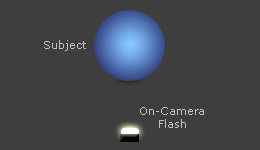

TIME OF DAY & SUBJECT APPEARANCE

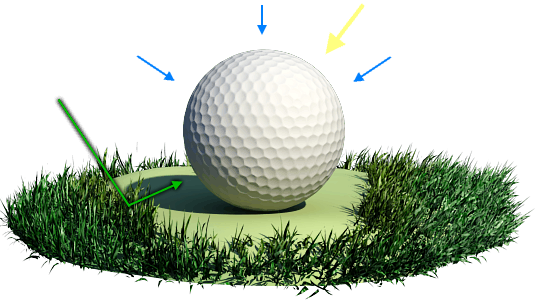

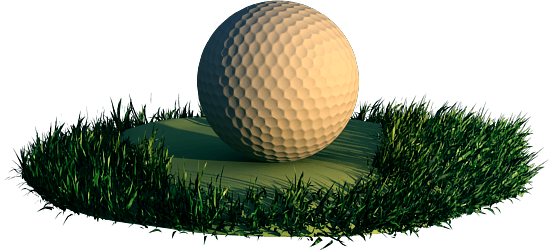

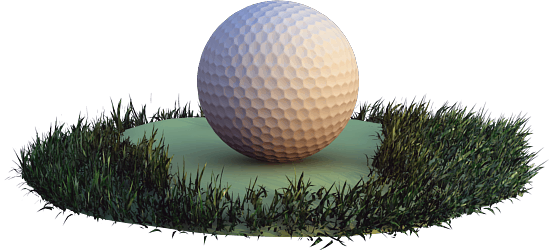

The color diagram gives a rough depiction of how the color temperature of sunlight transitions between each of the marked times, presuming clear skies.

Depiction of light as the day progresses from midday to dusk (or reverses towards dawn).

For locations near the poles, the above depiction may no longer apply.

Note how the contrast, direction and color temperature change the appearance of the sphere as the day progresses. For more on types of light, also refer to the tutorial on natural light in photography.

NOTES

Accuracy. What we really see during sunrise and sunset is the sun's apparent position, not its actual position. The apparent position is determined by how the sun's image gets refracted as viewed through the atmosphere, similar to how objects underwater appear shifted relative to their actual position. Since this refraction depends on weather properties such as air temperature, humidity and turbulence, the times above may differ from actual times by up to 5 minutes.

Midday Time. This describes when the sun is highest in the sky, and is often also referred to as "high noon" or "astronomical noon." For situations when there is no sunrise or sunset, such as near the poles in summer and winter, this describes the time of day when the sky is least dark (and the sun is closest to the horizon).

Sunrise & Sunset. These represent when the upper edge of the sun's disc crosses the horizon. Around this time the sky may become an intense fiery red or pink, depending on cloud cover. The hour after sunrise and before sunset is commonly referred to as the "golden hour," since this is often when sunlight appears as a rich orange.

Dawn & Dusk. These represent the start and end of civil twilight, respectively (when the sun crosses 6° below the horizon). Unlike sunrise and sunset, these times aren't necessarily visually well-defined. In general though, twilight is when a clear sky is still bright and depicts colors other than a dark blue.

UNIQUE SCENARIOS NEAR THE POLES

At locations near the poles, you may notice that sunrise/sunset or dawn/dusk is listed as "never" and a popup message appears. These scenarios might include:

- Perpetual Daylight. The sun remains above the horizon throughout the day, in which case both sunrise/sunset and dawn/dusk will be listed as "never." This can happen very close to the poles and/or near the summer solstice.

- Perpetual Nighttime. The sun remains more than 6° below the horizon throughout, in which case both sunrise/sunset and dawn/dusk will be listed as "never." This can happen very close to the poles and/or near the winter solstice.

- Perpetual Twilight. The sun remains below the horizon throughout the day, but never dips lower than 6° below the horizon. Both sunrise/sunset and dawn/dusk will be listed as "never." This can happen near the poles in the spring and fall.

- Never Brighter than Twilight. At midday the sky is bright but the sun never rises above the horizon; at midnight the sky becomes dark since the sun dips at least 6° below the horizon. Only sunrise and sunset will be listed as "never."

- Never Darker than Twilight. At midday the sun is high in the sky, but at midnight the sun never dips more than 6° below the horizon, so the sky remains relatively bright. Only dawn and dusk will be listed as "never."

Very high latitudes may also have more than one sunrise or sunset in a single day, but this calculator only mentions the first of each occurrence.
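The scenarios above follow from the sun's highest (midday) and lowest (midnight) elevations, which for a latitude φ and solar declination δ are approximately 90° - |φ - δ| and |φ + δ| - 90°. The sketch below assumes the declination stays constant over the day, ignores the observer's altitude and terrain, and uses the common -0.833° sunrise convention; the category labels mirror the list above, with "ordinary day" as an added label for everything else. Declination is about +23.44° at the June solstice, 0° at the equinoxes and about -23.44° at the December solstice.

```python
SUNRISE_ELEVATION = -0.833   # assumed convention: upper edge of sun on the horizon
CIVIL_TWILIGHT_LIMIT = -6.0  # dawn / dusk


def midday_and_midnight_elevation(latitude_deg, declination_deg):
    """Sun's highest and lowest elevation (degrees) over one day.
    Latitude is positive north; declination is the sun's for that date."""
    highest = 90.0 - abs(latitude_deg - declination_deg)
    lowest = abs(latitude_deg + declination_deg) - 90.0
    return highest, lowest


def daylight_scenario(latitude_deg, declination_deg):
    highest, lowest = midday_and_midnight_elevation(latitude_deg, declination_deg)
    if lowest > SUNRISE_ELEVATION:
        return "perpetual daylight"
    if highest < CIVIL_TWILIGHT_LIMIT:
        return "perpetual nighttime"
    if highest < SUNRISE_ELEVATION:
        if lowest > CIVIL_TWILIGHT_LIMIT:
            return "perpetual twilight"
        return "never brighter than twilight"
    if lowest > CIVIL_TWILIGHT_LIMIT:
        return "never darker than twilight"
    return "ordinary day"
```

For example, `daylight_scenario(80, 23.44)` (80°N at the June solstice) returns "perpetual daylight," while `daylight_scenario(80, -23.44)` returns "perpetual nighttime."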

FURTHER READING

For similar topics, also visit the following tutorials:

- Moon & Night Photography Calculator. This one is more useful for night photography.

- Making the Most of Natural Light in Photography. Discusses how different times of day influence subject appearance.

- Understanding White Balance. Discusses the types and terminology of light's color temperature.

NATURAL LIGHT IN PHOTOGRAPHY

Paying more attention to light is perhaps the single most important step you can take to improve your photography. With many landscapes, having good natural lighting can even be more important than the choice of subject itself. Different types of natural light can also produce a wide variety of subject appearances — even though these all have the same light source. Learn how to achieve the right light for your subject by utilizing the unique qualities of your particular time of day and weather.

Three factors influence how natural light renders a subject: time of day, camera direction and weather. We'll first explore time of day under clear skies, then move on to specific weather conditions; lighting directions will be saved for a future tutorial.

OVERVIEW

Even though all natural light originates from the sun, a subject's illumination actually consists of several components:

- Direct Sunlight (warmer, high contrast)

- Diffuse Skylight (cooler, low contrast)

- Bounced Light (has qualities of reflecting object)


Depending on the time of day, the relative amount of each component changes — resulting in an overall illumination with a different white balance or contrast. We'll start with astronomical high noon (when the sun is at its highest), then see what happens as the day progresses to sunset (or reverses to sunrise).

| Time of Day | Contrast | Colors | Direction of Sun |
|---|---|---|---|
| 1. Midday | Highest | Neutral White | Near Vertical |
| 2. Evening & Morning | High | Slightly Warm | Mid to Low |
| 3. Golden Hour & Sunrise/Sunset | Medium | Warm to Fiery | Near Horizontal |
| 4. Twilight, Dawn & Dusk | Low | Cool Pastel | Below Horizon |

Note: the contrast characteristics above apply only to clear skies.

Time of Day. Further from high noon, the sun dips closer to the horizon. This results in lower contrast, because sunlight has to pass through more atmosphere, and more easily bounces off the ground toward the subject. In addition, the atmosphere selectively filters more of the sun's blue light — resulting in warmer light overall.
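The statement that low sunlight passes through more atmosphere can be made concrete with the simplest air-mass approximation, which treats the atmosphere as a flat layer: the path length relative to an overhead sun is 1 / sin(elevation). This is a sketch under that assumption only; it overestimates badly within a few degrees of the horizon, where the Earth's curvature keeps the real value finite.

```python
import math


def relative_air_mass(sun_elevation_deg):
    """Path length of sunlight through the atmosphere relative to an overhead
    sun, using the flat (plane-parallel) atmosphere approximation."""
    if sun_elevation_deg <= 0:
        raise ValueError("this approximation needs the sun above the horizon")
    return 1 / math.sin(math.radians(sun_elevation_deg))


for elevation in (90, 60, 30, 10):
    print(f"sun at {elevation} degrees: {relative_air_mass(elevation):.2f}x the atmosphere")
```

Under this approximation, sunlight at 30° elevation already crosses twice as much atmosphere as at high noon (near the equator), and at 10° nearly six times as much, which is why low sun is both warmer and softer.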

Weather. Along with time of day, the type and extent of cloud cover is the other most influential cause of lighting variation. It primarily influences lighting because it changes the balance between direct sunlight and diffuse skylight, which in turn affects the apparent contrast and color temperature of the light source. We'll discuss this more at the end.

CLEAR MIDDAY SUNSHINE

Midday lighting consists primarily of direct, downward sunlight. Such light has little chance to scatter and diffuse through the atmosphere, or to bounce off the ground and illuminate the subject indirectly. This results in the hardest and most neutrally-colored lighting of any time of day, and is typically the least desirable type of natural light.

Due to these drawbacks, too often photographers put their camera away — potentially missing unique opportunities. For example, water may appear more transparent, since light penetrates deeper and direct reflections off the surface are less likely. Alternatively, other types of photographs are more about capturing a particular event, as opposed to achieving an image with optimal lighting.

Overcoming Unique Challenges. Just be aware that color saturation is typically lower, and that downward shadows generally don't produce flattering portraits or make other subjects appear three-dimensional. Many photographers encourage liberal use of polarizing filters to manage contrast, since this is often when they're most impactful, but at this time these can also more easily make the sky appear unnaturally dark and blue. If shadows appear too harsh and colors aren't sufficiently saturated, try converting to black and white, since black and white images may even benefit from the high contrast of midday light.

EVENING & MID-MORNING

Evening and mid-morning light becomes slightly warmer, and begins to cast noticeable shadows. Since direct light now originates from an upper side, subjects often appear much more three dimensional. Such lighting is usually much more predictable than sunsets and sunrises, primarily because this time is less dependent on the effect of surrounding mountains, or the location of the cloud line.

Overcoming Unique Challenges. Mid-evening and morning has perhaps the most compromised lighting: it's not as neutrally colored as during midday, but also not as warm or intense as a sunset. It's also less harsh and originates from a better angle than during midday, but also isn't as soft and diffuse as during twilight or overcast lighting. These qualities make it a good all-around time of day for photography, but also run the risk of making photos appear too ordinary, since one cannot use any uniquely exaggerated lighting traits to emphasize particular features in their subject.

GOLDEN HOUR & SUNRISE/SUNSET

The hour just before sunset and just after sunrise (the "golden hour") is typically regarded as having the most desirable light for photography. This is characterized by horizontal light that casts long shadows and gives subjects a warm glow.

Sunsets and sunrises make for exciting and highly varied lighting, primarily because these are heavily influenced by subtleties in the weather. Clouds are lit by sunlight that reflects off them from underneath, as opposed to sunlight that has diffused through them from above, potentially causing the sky to light up with a soft, warm light.

Overcoming Unique Challenges. Sunsets and sunrises are often spectacularly vibrant in person, but this isn't always translated well into an image. Make sure that your camera's auto white balance doesn't counteract an otherwise warm-looking scene, and that its color saturation isn't set so conservatively (to minimize the risk of color clipping) that the scene loses its vibrance. Ironically, the moments when lighting is most dramatic are also when your camera is most likely to make an error with its exposure; try to take several photos, or use partial or spot metering just in case.

Sunrise vs. Sunset. Although sunsets and sunrises are in theory identical, weather patterns can cause these to be consistently different, so many photographers prefer one over the other. Some find that they're better prepared to photograph a sunset than a sunrise, because light quality builds steadily prior to a sunset, whereas with sunrises the light often starts at its best and gradually fades. In addition, being awake and on location for a sunrise is often impractical in the summer months. On the other hand, sunrise photography is usually devoid of potentially distracting crowds, and more often has low-lying mist and dew on foliage. Sunrises often also have a calm, quiescent quality (particularly with scenes involving water) that isn't present during sunsets.

TWILIGHT, DAWN & DUSK

Twilight, dawn and dusk typically describe the half hour before sunrise or after sunset — when the sky is still bright but there's no longer any direct sunlight. The primary source of light effectively becomes the entire sky, with one side appearing warm and reddish and the other becoming a cool blue or purple. This can produce wonderfully soft, multicolored lighting that gives a calm, peaceful mood to subjects.

Overcoming Unique Challenges. Perhaps the biggest disadvantages are the lack of contrast and ambient light. Hand-held shots are therefore rarely possible, and achieving a sufficient sense of depth may require more attention to composition. Cameras also often over-expose twilight scenes when using automatic exposures — potentially washing out the otherwise delicate colors — since twilight almost never contains any fully white objects.

Alpenglow. If you're lucky, a phenomenon called "alpenglow" may appear as a red or pinkish glow in the sky furthest from the setting sun, but it's never a guarantee. Alpenglow can be a helpful effect for extending a sky's warmth well beyond sunset.

SHADE & OVERCAST SUNLIGHT

Shade and overcast light typically have a cool, soft appearance, since the source of such light is spread across the entire sky, and doesn't include any direct sunlight. Textures therefore appear much subtler, and reflections on smooth surfaces are more diffuse and subdued. The color of such light is also more heavily influenced by bounced light from nearby objects, so subjects shaded by foliage can even incur a greenish tint.

Many photographers shy away from this type of lighting, but doing so is often a mistake. For example, depending on the degree of cloud cover, bright overcast light can actually be ideal for outdoor portraits and wildlife (as long as the cool white balance is corrected), since it doesn't cast harsh shadows across the subject's face. Bright overcast light may also enhance close-up photography, such as with flowers, since the appearance and saturation of colors usually improve. Alternatively, low contrast light can also be better when the subject itself is high in contrast, such as subjects containing both dark and light colors.

Overcoming Unique Challenges. A common trick is to keep the gray sky out of the photo — unless the clouds are particularly moody and highly textured. Since shadows play much less of a role, achieving a sufficient sense of depth may be difficult — just as during twilight — but this time one also doesn't have the appealing pastel lighting to compensate. Images straight out of the camera often appear more bluish than desired, so shooting in RAW and adjusting the white balance afterwards is also encouraged. Liberal use of the levels tool and curves tool may also be helpful if one wishes to use the full contrast range in a print.

OTHER SPECIFIC WEATHER CONDITIONS

Weather is effectively just a massive filter that lies between the sun and your subject. At one extreme, light could be relatively warm and highly localized, such as sunlight from a clear sky. At the other extreme, light could be cooler and envelop the subject, such as diffuse sunlight through a densely overcast sky. The thickness and extent of cloud cover is what decides where in this continuum your particular weather will have its effect.

When the sky is partly cloudy, one can effectively use the sky to paint their scene with light — if one is willing to wait for just the right moment. This is an excellent and often overlooked opportunity, especially during the middle of the day.

Alternatively, stormy weather can produce extremely high contrast light since rain clears the air of haze and dust. Sunsets after a storm are also often the most dramatic, in part because the sky can become much darker than the land — providing a nice high contrast backdrop for front-lit subjects. This is also when rainbows are most likely to appear.

Other scenarios include photography in the fog, mist or haze. This not only greatly decreases light's contrast — just as during an overcast day — but also does so progressively for more distant objects.

FURTHER READING

We also have an online tool which will automatically calculate your times and direction:

Sunrise, Sunset & Twilight Calculator for Photography

For similar topics, also visit the following tutorials:

- Understanding White Balance. Discusses the types and terminology of light's color temperature.

- Introduction to Portrait Lighting: One Light Source. Understand how light influences the appearance of a portrait.

- Photography in Fog, Mist or Haze. Learn how these unique weather conditions influence lighting.

- Common Obstacles in Night Photography. An introduction to some of the challenging technical hurdles.

CAMERAS vs. THE HUMAN EYE

Why can't I just point my camera at what I'm seeing and record that? It's a seemingly simple question. It's also one of the most complicated to answer, and requires delving into not only how a camera records light, but also how and why our eyes work the way they do. Tackling such questions can reveal surprising insights about our everyday perception of the world — in addition to making one a better photographer.


INTRODUCTION

Our eyes are able to look around a scene and dynamically adjust based on subject matter, whereas cameras capture a single still image. This trait accounts for many of our commonly understood advantages over cameras. For example, our eyes can compensate as we focus on regions of varying brightness, can look around to encompass a broader angle of view, or can alternately focus on objects at a variety of distances.

However, the end result is akin to a video camera — not a stills camera — that compiles relevant snapshots to form a mental image. A quick glance by our eyes might be a fairer comparison, but ultimately the uniqueness of our visual system is unavoidable because:

What we really see is our mind's reconstruction of objects based on input provided by the eyes — not the actual light received by our eyes.

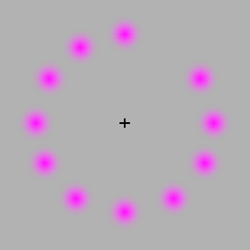

Skeptical? Most are — at least initially. The examples below show situations where one's mind can be tricked into seeing something different than one's eyes:

Figure panels: False Color | Mach Bands

False Color: Stare at the central cross while the missing dot rotates around the circle. After a while this dot will appear to be green, even though no green is actually present in the image.

Mach Bands: Each of the bands will appear slightly darker or lighter near its upper and lower edges, even though each is uniformly gray.

However, this shouldn't discourage us from comparing our eyes and cameras! Under many conditions a fair comparison is still possible, but only if we take into consideration both what we're seeing and how our mind processes this information. Subsequent sections will try to distinguish the two whenever possible.

OVERVIEW OF DIFFERENCES

This tutorial groups comparisons into the following visual categories:

- Angle of View

- Resolution & Detail

- Sensitivity & Dynamic Range

The above are often understood to be where our eyes and cameras differ the most, and are usually also where there is the most disagreement. Other topics might include depth of field, stereo vision, white balancing and color gamut, but these won't be the focus of this tutorial.

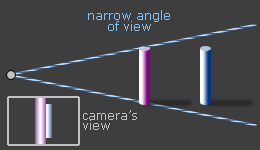

1. ANGLE OF VIEW

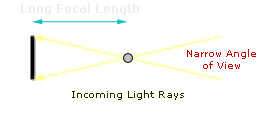

With cameras, this is determined by the focal length of the lens (along with the sensor size of the camera). For example, a telephoto lens has a longer focal length than a standard portrait lens, and thus encompasses a narrower angle of view.
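For a rectilinear camera lens, the relationship between focal length, sensor size and angle of view is simple trigonometry. The sketch below uses the standard formula 2·atan(d / 2f), assuming the lens is focused at infinity (in a simple thin-lens model, focusing closer narrows the angle slightly); `angle_of_view` is an illustrative helper name and the focal lengths are arbitrary examples.

```python
import math


def angle_of_view(focal_length_mm: float, sensor_dimension_mm: float) -> float:
    """Angle of view (degrees) of a rectilinear lens focused at infinity.

    sensor_dimension_mm can be the sensor's width, height or diagonal,
    depending on which angle of view is wanted.
    """
    return math.degrees(2 * math.atan(sensor_dimension_mm / (2 * focal_length_mm)))


FULL_FRAME_WIDTH_MM = 36.0  # a full frame sensor measures 36 x 24 mm

# Wide angle, "normal" and telephoto focal lengths on the same sensor
for focal_mm in (24, 50, 200):
    aov = angle_of_view(focal_mm, FULL_FRAME_WIDTH_MM)
    print(f"{focal_mm} mm: {aov:.1f} degrees horizontal")
```

On a 36 mm wide full frame sensor this gives roughly 74°, 40° and 10° horizontally: the longer the focal length, the narrower the angle of view.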

Unfortunately our eyes aren't as straightforward. Although the human eye has a focal length of approximately 22 mm, this is misleading because (i) the back of the eye is curved, (ii) the periphery of our visual field contains progressively less detail than the center, and (iii) the scene we perceive is the combined result of both eyes.

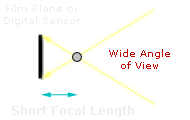

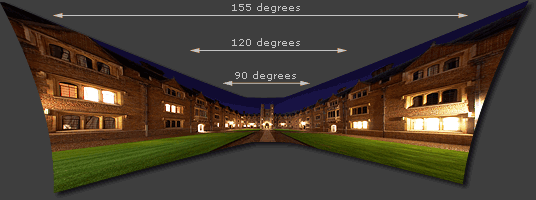

Each eye individually has anywhere from a 120-200° angle of view, depending on how strictly one defines objects as being "seen." Similarly, the dual eye overlap region is around 130° — or nearly as wide as a fisheye lens. However, for evolutionary reasons our extreme peripheral vision is only useful for sensing motion and large-scale objects (such as a lion pouncing from your side). Furthermore, such a wide angle would appear highly distorted and unnatural if it were captured by a camera.

Figure panels: Left Eye | Dual Eye Overlap | Right Eye

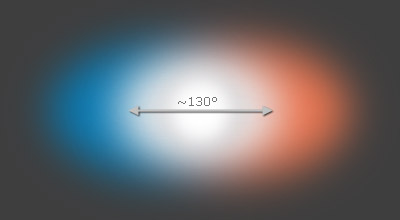

Our central angle of view, around 40-60°, is what most impacts our perception. Subjectively, this would correspond with the angle over which you could recall objects without moving your eyes. Incidentally, this is close to a 50 mm "normal" focal length lens on a full frame camera (43 mm to be precise, the diagonal of a full frame sensor), or a 27 mm focal length on a camera with a 1.6X crop factor. Although this doesn't reproduce the full angle of view at which we see, it does correspond well with what we perceive as having the best trade-off between different types of distortion:

Figure panels: Wide Angle Lens (objects are very different sizes) | Telephoto Lens (objects are similar in size)

Too wide an angle of view and the relative sizes of objects are exaggerated, whereas too narrow an angle of view means that objects are all nearly the same relative size and you lose the sense of depth. Extremely wide angles also tend to make objects near the edges of the frame appear stretched (if captured by a standard/rectilinear camera lens).

By comparison, even though our eyes capture a distorted wide angle image, we reconstruct this to form a 3D mental image that is seemingly distortion-free.
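The 43 mm and 27 mm figures quoted above can be reproduced by treating a "normal" lens as one whose focal length equals the sensor diagonal, and the crop factor as the ratio of sensor diagonals. A minimal sketch under those assumptions (helper names are illustrative):

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # width, height of a full frame sensor


def sensor_diagonal(width_mm: float, height_mm: float) -> float:
    return math.hypot(width_mm, height_mm)


def crop_equivalent_focal_length(full_frame_focal_mm: float, crop_factor: float) -> float:
    """Focal length giving the same angle of view on a smaller (cropped) sensor."""
    return full_frame_focal_mm / crop_factor


def diagonal_angle_of_view(focal_mm: float, diagonal_mm: float) -> float:
    return math.degrees(2 * math.atan(diagonal_mm / (2 * focal_mm)))


normal_mm = sensor_diagonal(*FULL_FRAME_MM)
print(f"Full frame diagonal ('normal' focal length): {normal_mm:.1f} mm")
print(f"Same view on a 1.6X crop sensor: {crop_equivalent_focal_length(normal_mm, 1.6):.1f} mm")
print(f"Diagonal angle of view: {diagonal_angle_of_view(normal_mm, normal_mm):.1f} degrees")
```

A lens whose focal length matches the sensor diagonal always covers about 53° diagonally, which falls inside the 40-60° central angle of view described above.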

2. RESOLUTION & DETAIL

Most current digital cameras have 5-20 megapixels, which is often cited as falling far short of our own visual system. This is based on the fact that at 20/20 vision, the human eye is able to resolve the equivalent of a 52 megapixel camera (assuming a 60° angle of view).
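The text does not show how the 52 megapixel figure is derived. One set of assumptions that reproduces it is that 20/20 vision resolves detail about 1 arcminute across, and that each such detail needs two pixels along each axis, applied over a square 60° field. This is a reconstruction under those assumptions, not necessarily the original calculation; `eye_equivalent_megapixels` is a hypothetical helper:

```python
def eye_equivalent_megapixels(field_of_view_deg: float = 60.0,
                              arcmin_per_detail: float = 1.0,
                              pixels_per_detail: float = 2.0) -> float:
    """Megapixels needed to match 20/20 acuity over a square field of view.

    Assumptions (not stated in the text): 20/20 vision resolves detail about
    1 arcminute across, and each resolvable detail needs 2 pixels
    (Nyquist-style sampling) along each axis.
    """
    arcmin_per_side = field_of_view_deg * 60
    pixels_per_side = arcmin_per_side / arcmin_per_detail * pixels_per_detail
    return pixels_per_side ** 2 / 1e6


print(f"{eye_equivalent_megapixels():.1f} MP")                     # 7200 x 7200 pixels
print(f"{eye_equivalent_megapixels(pixels_per_detail=1):.1f} MP")  # 3600 x 3600 pixels
```

Drop the two-pixel assumption and the estimate falls to about 13 MP, so the headline number depends heavily on how "resolve" is defined.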

However, such calculations are misleading. Only our central vision is 20/20, so we never actually resolve that much detail in a single glance. Away from the center, our visual ability decreases dramatically, such that by just 20° off-center our eyes resolve only one-tenth as much detail. At the periphery, we only detect large-scale contrast and minimal color:

Qualitative representation of visual detail using a single glance of the eyes.

Taking the above into account, a single glance by our eyes is therefore only capable of perceiving detail comparable to a 5-15 megapixel camera (depending on one's eyesight). However, our mind doesn't actually remember images pixel by pixel; it instead records memorable textures, color and contrast on an image by image basis.

In order to assemble a detailed mental image, our eyes therefore focus on several regions of interest in rapid succession. This effectively paints our perception:

The end result is a mental image whose detail has effectively been prioritized based on interest. This has an important but often overlooked implication for photographers: even if a photograph approaches the technical limits of camera detail, such detail ultimately won't count for much if the imagery itself isn't memorable.

Other important differences with how our eyes resolve detail include:

Asymmetry. Each eye is more capable of perceiving detail below our line of sight than above, and their peripheral vision is also much more sensitive in directions away from the nose than towards it. Cameras record images almost perfectly symmetrically.

Low-Light Viewing. In extremely low light, such as under moonlight or starlight, our eyes actually begin to see in monochrome. Under such situations, our central vision also begins to depict less detail than just off-center. Many astrophotographers are aware of this, and use it to their advantage by staring just to the side of a dim star if they want to be able to see it with their unassisted eyes.

Subtle Gradations. Too much attention is often given to the finest detail resolvable, but subtle tonal gradations are also important — and happen to be where our eyes and cameras differ the most. With a camera, enlarged detail is always easier to resolve — but counter-intuitively, enlarged detail might actually become less visible to our eyes. In the example below, both images contain texture with the same amount of contrast, but this isn't visible in the image to the right because the texture has been enlarged.

Figure panels: Fine Texture (barely visible) | Enlarged 16X | Coarse Texture (no longer visible)

3. SENSITIVITY & DYNAMIC RANGE

Dynamic range* is one area where the eye is often seen as having a huge advantage. If we were to consider situations where our pupil opens and closes for different brightness regions, then yes, our eyes far surpass the capabilities of a single camera image (and can have a range exceeding 24 f-stops). However, in such situations our eye is dynamically adjusting like a video camera, so this arguably isn't a fair comparison.

Figure panels: Eye Focuses on Background | Eye Focuses on Foreground | Our Mental Image

If we were to instead consider our eye's instantaneous dynamic range (where our pupil opening is unchanged), then cameras fare much better. This would be similar to looking at one region within a scene, letting our eyes adjust, and not looking anywhere else. In that case, most estimate that our eyes can see anywhere from 10-14 f-stops of dynamic range, which definitely surpasses most compact cameras (5-7 stops), but is surprisingly similar to that of digital SLR cameras (8-11 stops).

On the other hand, our eye's dynamic range also depends on brightness and subject contrast, so the above only applies to typical daylight conditions. With low-light star viewing our eyes can approach an even higher instantaneous dynamic range, for example.

*Quantifying Dynamic Range. The most commonly used unit for measuring dynamic range in photography is the f-stop, so we'll stick with that here. This describes the ratio between the lightest and darkest recordable regions of a scene, in powers of two. A scene with a dynamic range of 3 f-stops therefore has a white that is 8X as bright as its black (since 2³ = 2x2x2 = 8).
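Since each f-stop doubles the ratio, converting between stops and contrast ratios is a base-2 logarithm. A short sketch, using the upper ends of the ranges quoted in this section as inputs (function names are illustrative):

```python
import math


def stops_from_ratio(contrast_ratio: float) -> float:
    """Dynamic range in f-stops for a brightest:darkest luminance ratio."""
    return math.log2(contrast_ratio)


def ratio_from_stops(stops: float) -> float:
    """Brightest:darkest luminance ratio for a dynamic range given in f-stops."""
    return 2.0 ** stops


# Upper ends of the ranges quoted in this section
for label, stops in [("compact camera", 7), ("digital SLR", 11),
                     ("eye, instantaneous", 14), ("eye, adapting", 24)]:
    print(f"{label}: {stops} stops = {ratio_from_stops(stops):,.0f}:1")
```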

Photos on left (matches) and right (night sky) by lazlo and dcysurfer, respectively.

Sensitivity. This is another important visual characteristic, and describes the ability to resolve very faint or fast-moving subjects. During bright light, modern cameras are better at resolving fast-moving subjects, as exemplified by unusual-looking high-speed photography. This is often made possible by camera ISO speeds exceeding 3200; the equivalent daylight ISO for the human eye is even thought to be as low as 1.

However, under low-light conditions, our eyes become much more sensitive (presuming that we let them adjust for 30+ minutes). Astrophotographers often estimate this as being near ISO 500-1000; still not as high as digital cameras, but close. On the other hand, cameras have the advantage of being able to take longer exposures to bring out even fainter objects, whereas our eyes don't see additional detail after staring at something for more than about 10-15 seconds.

CONCLUSIONS & FURTHER READING

One might contend that whether a camera is able to beat the human eye is inconsequential, because cameras require a different standard: they need to make realistic-looking prints. A printed photograph doesn't know which regions the eye will focus on, so every portion of a scene would need to contain maximal detail — just in case that's where we'll focus. This is especially true for large or closely viewed prints. However, one could also contend that it's still useful to put a camera's capabilities in context.

Overall, most of the advantages of our visual system stem from the fact that our mind is able to intelligently interpret the information from our eyes, whereas with a camera, all we have is the raw image. Even so, current digital cameras fare surprisingly well, and surpass our own eyes for several visual capabilities. The real winner is the photographer who is able to intelligently assemble multiple camera images — thereby surpassing even our own mental image.

Please see the following for further reading on this topic:

- High Dynamic Range. How to extend the dynamic range of digital cameras using multiple exposures. Results can even exceed the human eye.

- Graduated Neutral Density (GND) Filters. A technique for enhancing the appearance of high contrast scenes similar to how we form our mental image.

- Photo Stitching Digital Panoramas. A general discussion of using multiple photos to enhance the angle of view.

DIGITAL PHOTO RESTORATION

Digital photo restoration can work miracles by turning a faded old family portrait into an image of seemingly modern quality. Alternatively, this process can work in reverse to give a photograph a timeless feel. Performing either of these transformations yourself is not as difficult as it may seem — you just need to know which restoration tools to use.

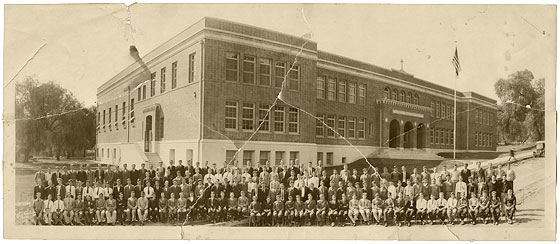

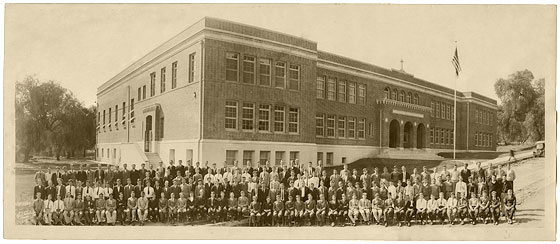

Example photo restoration from a scanned print, circa 1900.

Although photos can degrade in a variety of ways, this usually includes some combination of fading, color casts (often yellowish), and localized physical damage (such as stains or scratches). Fortunately, photo editing has just the right tools for each aspect:

- Fading: levels, curves, contrast and black level tools

- Color Casts: white balance, color balance and other color tools

- Localized Damage: clone stamp, healing brush and other selective editing tools

Each of these will be discussed separately in the sections below, but before that we need to focus on the scanner itself . . .

IT ALL STARTS WITH THE SCANNER

Before any digital restoration can be performed, the original photo (or film negative) needs to be scanned. This step is critical, since it ultimately determines your restoration's potential. Needless to say, try to use the best scanning equipment and software at your disposal. All else being equal, flatbed scanners usually provide much higher quality than comparable sheetfed scanners.

Scanning Software. Often whichever software came with your scanner will work fine, especially since this caters to the capabilities of your particular device. However, sometimes this can be too limiting, in which case Vuescan and Silverfast are two notable third-party choices. Silverfast is quite powerful if you happen to have the film negative, while Vuescan is more than sufficient (and inexpensive) for printed photos.

Scanner Settings. Pay particular attention to the following:

- Resolution. In general, use a dot per inch (DPI) resolution of at least 400-600 DPI for prints, and several times this resolution with film negatives. The chosen scanning resolution will depend on the sharpness and focus of the original images, but should ideally resolve the speckles of noise/grain in the print. Try experimenting with different settings so that you can see what the image looks like on-screen.

- Precision. Scan at the highest bit depth possible: 16 bits per channel (48 bits of total color depth), if available. This way your digital original can withstand more retouching before it begins to show signs of posterization.

- File Type. Save the scan as a TIFF file to maximize detail preservation. If your scanner and software support RAW/DNG (digital negative) files then this is even better.

- Color vs. B&W. Saving black and white photos as grayscale image files can preserve disk space, but this isn't the only consideration. A color scan can sometimes make the restoration process easier — even for a black and white image — since color makes it easier to identify and remove stains which aren't native to the photo.

- Film Negatives. These provide more flexibility — if you are fortunate enough to still have them. However, negatives are often more susceptible to physical damage, so sometimes the original photo is still a better source. Regardless, you will need to either send the negatives off for professional drum scanning, or have a film negative capable scanner.
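The resolution, precision and color settings above translate directly into pixel counts and file sizes. A rough sketch for a hypothetical 4 x 6 inch print scanned at 600 DPI; it counts uncompressed pixel data only, so actual TIFF or DNG files will differ somewhat because of headers and compression. Helper names are illustrative:

```python
def scan_dimensions(width_in: float, height_in: float, dpi: int) -> tuple[int, int]:
    """Pixel dimensions of a scan of a print of the given size."""
    return round(width_in * dpi), round(height_in * dpi)


def raw_pixel_data_mb(width_px: int, height_px: int, bits_per_channel: int = 16,
                      channels: int = 3) -> float:
    """Uncompressed pixel data in megabytes (file headers and compression ignored)."""
    return width_px * height_px * channels * bits_per_channel / 8 / 1e6


w, h = scan_dimensions(4, 6, 600)  # hypothetical 4 x 6 inch print at 600 DPI
print(w, h, f"{w * h / 1e6:.2f} MP")
print(f"48-bit RGB: {raw_pixel_data_mb(w, h):.2f} MB")
print(f"24-bit RGB: {raw_pixel_data_mb(w, h, bits_per_channel=8):.2f} MB")
print(f"16-bit grayscale: {raw_pixel_data_mb(w, h, channels=1):.2f} MB")
# More bits per channel means finer tonal steps, hence more room for retouching
print("tonal levels per channel:", 2 ** 8, "at 8 bits vs", 2 ** 16, "at 16 bits")
```

Doubling the DPI quadruples the pixel count and file size, which is worth keeping in mind before scanning negatives at several times the print resolution.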

ARCHIVE THE ORIGINAL SCANNED FILE

Before any editing, first save your original scanned TIFF or RAW/DNG file, and preferably in more than one location. All subsequent editing should be saved in a separate file. Restoration technique, personal preference, intended use and imaging technology all change — potentially requiring a new restoration at a later date (with a photo that has continued to degrade). See the tutorial on archival digital photo backup.

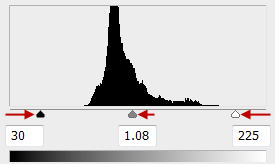

1. FADING: RESTORE CONTRAST & DYNAMIC RANGE

Perhaps the single most common form of degradation is fading. This causes the blacks to become less dark, and the whites to become less light. In other words: the photo begins to lose contrast.

Fortunately, this is also one of the more straightforward ageing artifacts to counteract. The most universal tool for fixing this is probably the levels tool in Photoshop (and other image editing programs). The before and after versions below show how the levels tool changes the example photograph:

Figure panels: Levels Adjustment Tool | Before | After

The image histogram needs to extend across as broad a range of tones as possible (from left to right above), but not so much that this detracts from the (presumed) mood of the original photograph. For example, many types of old photos never had fully black or white regions — even when brand new — giving these a softer and subtler appearance.
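Conceptually, the levels tool's input sliders remap each tone linearly between a black point and a white point, with a midtone (gamma) slider bending the curve in between. A minimal sketch of one common formulation, assuming tones normalized to 0.0-1.0; exact behavior varies between editors, and `levels` is a hypothetical helper rather than any program's API:

```python
def levels(value: float, black_point: float = 0.0, white_point: float = 1.0,
           gamma: float = 1.0, output_black: float = 0.0, output_white: float = 1.0) -> float:
    """Remap one tone (0.0-1.0) in the manner of a typical levels dialog.

    Input tones at or below black_point map to output_black, tones at or above
    white_point map to output_white, and gamma bends the midtones (values above
    1.0 brighten them). Setting output_black above 0 or output_white below 1
    keeps the softer look of old prints that never had fully black or white regions.
    """
    if white_point <= black_point:
        raise ValueError("white_point must be greater than black_point")
    t = (value - black_point) / (white_point - black_point)
    t = min(max(t, 0.0), 1.0) ** (1.0 / gamma)
    return output_black + t * (output_white - output_black)


# A faded print whose tones only span 0.15-0.85 (hypothetical values)
faded = [0.15, 0.30, 0.50, 0.70, 0.85]
print([round(levels(v, 0.15, 0.85), 3) for v in faded])
print([round(levels(v, 0.15, 0.85, output_black=0.05, output_white=0.95), 3) for v in faded])
```

The second line shows the gentler option: mapping to output values slightly above black and below white preserves some of the original print's subtle appearance.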

Other specific tips on contrast-enhancement include:

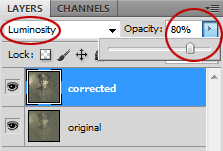

- Duplicate Layers & Opacity. Perform any contrast changes in a duplicate layer; that way the effect can be fine-tuned later using the opacity slider.

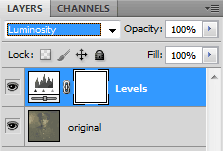

- Adjustment Layers. Alternatively, using adjustment layers is an even better solution. This has the added benefits of minimizing the risk of posterization, permitting quick and easy re-adjustment and reducing the overall file size. For contrast-enhancement in Photoshop, select "Layer > New Adjustment Layer > Levels..." from the top menu.

- Lightness Channel. Performing adjustments on the lightness channel increases the contrast of tones only (and not of colors); otherwise, contrast adjustments can inadvertently exacerbate color casts or change the overall hue. If editing in duplicate or adjustment layers, you can achieve this by setting the blending mode to "luminosity."

- Automation. Automated tools typically work by stretching the image histogram so that it spans from the far left (black) to the far right (white). The "auto contrast" and "auto levels" features are two common ways to achieve this. While this is usually desirable for modern photos, it may result in too much contrast with an old photograph.

- Curves Tool. This can provide even more specific adjustments, but is a little more difficult to use and can be overkill for many restorations. See the tutorial on the Photoshop curves tool for more on this topic.
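The automated approach described above can be sketched as a histogram stretch with two optional refinements: ignoring a small fraction of extreme tones, and blending the result back toward the original the way an opacity slider on a duplicate layer would. `auto_levels` is a hypothetical helper operating on a flat list of tones (0.0-1.0), not the algorithm of any particular program:

```python
def auto_levels(tones: list[float], clip_fraction: float = 0.0,
                strength: float = 1.0) -> list[float]:
    """Stretch tones so the darkest maps to 0.0 and the lightest to 1.0.

    clip_fraction lets that share of tones clip at each end (some auto tools
    offer a similar clipping percentage), and strength blends the result back
    toward the original, like lowering the opacity of a duplicate layer.
    """
    ordered = sorted(tones)
    n = len(ordered)
    k = min(int(clip_fraction * n), (n - 1) // 2)  # tones to ignore at each end
    low, high = ordered[k], ordered[n - 1 - k]
    if high <= low:
        return list(tones)  # flat image: nothing to stretch
    stretched = [min(max((t - low) / (high - low), 0.0), 1.0) for t in tones]
    return [t + strength * (s - t) for t, s in zip(tones, stretched)]


old_photo = [0.25, 0.35, 0.50, 0.62, 0.75]      # hypothetical faded tones
full = auto_levels(old_photo)                   # full stretch to 0.0-1.0
gentle = auto_levels(old_photo, strength=0.5)   # halfway, for a subtler result
print([round(v, 3) for v in full])
print([round(v, 3) for v in gentle])
```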

The same tools of opacity, duplicate layers and adjustment layers are also helpful in the next two sections . . .

2. COLOR CASTS: WHITE BALANCE & COLOR TOOLS

Fading is one thing when it simply reduces contrast; however, it becomes harder to restore, and more unsightly, if the photograph develops an unrealistic color cast. This appears as the familiar "yellowing" of photos, even in those only a few decades old.

The upper portion of the white diaper was clicked on using the dropper tool for the above correction.

Using the "white balance dropper tool" is often the easiest technique since it has the potential to neutralize color casts in a single step. Use the dropper tool on a region which you believe to have been (i) light neutral gray, (ii) receiving most of its illumination from the primary light source and (iii) not tinted by light bouncing off nearby colored objects.
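The dropper works by computing per-channel multipliers that make the clicked region neutral, then applying those multipliers to the whole image. A minimal sketch, assuming RGB values in 0.0-1.0 and a hypothetical sample taken from a yellowed region that was originally light neutral gray; function names are illustrative:

```python
def white_balance_gains(sample_rgb: tuple[float, float, float]) -> tuple[float, float, float]:
    """Per-channel gains that make the sampled region neutral gray.

    Green is held fixed and red/blue are scaled to match it, one common
    convention. Ideally the values would be linear (not gamma-encoded) RGB;
    this sketch ignores that distinction.
    """
    r, g, b = sample_rgb
    if min(r, g, b) <= 0:
        raise ValueError("sample needs a non-zero value in every channel")
    return g / r, 1.0, g / b


def apply_gains(pixel, gains):
    """Scale each channel of an RGB pixel, clipping at 1.0."""
    return tuple(min(c * k, 1.0) for c, k in zip(pixel, gains))


# Hypothetical yellowed sample from something that was originally light neutral gray
sample = (0.80, 0.75, 0.55)
gains = white_balance_gains(sample)
print(apply_gains(sample, gains))              # roughly (0.75, 0.75, 0.75)
print(apply_gains((0.40, 0.30, 0.20), gains))  # same correction applied elsewhere
```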

On the other hand, sometimes there just isn't an object which satisfies the above requirements — in which case you'll need to resort to one of the following:

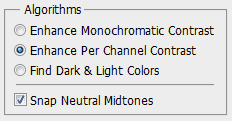

- Automated Correction. This attempts to remove color casts not by using any specific region of a photo, but instead by analyzing the image as a whole. In Photoshop, two ways of achieving this are by selecting (i) "Auto Color" from the image drop-down menu, or (ii) "snap neutral midtones" after clicking the "Options..." button in the levels tool. Be careful though; many of these automated methods attempt to correct both color balance and contrast in a single step. As usual, edit in a duplicate layer so that the effect can be decreased afterwards.

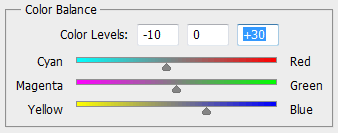

- Manual Correction. This typically involves using the "color balance" (or similarly named) tool to tweak the overall warmth or coolness of a photo, or relative prevalence of magenta versus green. During adjustment, the image is manually inspected until the overall color balance is desirable.

Figure panels: Cooler | Warmer | More Green | More Magenta

Regardless, achieving just the right color balance can be highly subjective and there isn't always one right answer. When in doubt, it's generally a good idea to err on the side of a photo that appears slightly warmer rather than cooler, or that has slightly more magenta than green coloring. Compare the cooler, warmer, greener and more magenta versions of the example image to see for yourself.
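One simple automated approach is the "gray world" assumption: scale each color channel so that the image as a whole averages to neutral gray. This is a generic technique shown for illustration, not the algorithm behind Photoshop's "Auto Color" or any other specific tool, and the pixel values are hypothetical:

```python
def gray_world(pixels: list[tuple[float, float, float]]) -> list[tuple[float, ...]]:
    """Remove an overall color cast by assuming the scene averages to neutral gray."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Scale every pixel's channels by the same gains, clipping at 1.0
    return [tuple(min(p[c] * gains[c], 1.0) for c in range(3)) for p in pixels]


yellowed = [(0.60, 0.50, 0.30), (0.40, 0.35, 0.20), (0.80, 0.70, 0.45)]  # hypothetical
for before, after in zip(yellowed, gray_world(yellowed)):
    print(before, "->", tuple(round(c, 3) for c in after))
```

Gray world breaks down when a scene is genuinely dominated by one color (a portrait against a large red wall, for example), which is one reason automated corrections should be checked by eye and applied in a duplicate layer.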

Complications. Perhaps the most common problem is that old photos don't always develop color casts uniformly. This might be due to how the photo was stored, or could even be a result of the chemistry of the photographic paper.

Non-uniform color casts unfortunately complicate the correction process because they require targeted adjustments. For example, if one shifted a photo's overall color balance to remove a yellow cast in the shadows, this would cause the midtones and highlights to appear bluish, or vice versa. In such cases, one needs to apply color corrections selectively, either by targeting the highlights or shadows within the color balance tool, or by performing color correction with the curves tool on the individual color channels.
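Targeting only the shadows amounts to weighting a color shift by how dark each pixel is. The sketch below uses a simple linear weight based on Rec. 709 luminance; both the weighting and the `correct_shadows` helper are illustrative assumptions, not how any particular color balance tool defines its tonal ranges:

```python
def luminance(rgb: tuple[float, float, float]) -> float:
    """Relative luminance with Rec. 709 weights.

    Strictly these weights apply to linear RGB; here they are simply applied
    to whatever 0.0-1.0 values are given.
    """
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def correct_shadows(pixel, shift):
    """Add a color shift that is strongest in the shadows and fades out toward white.

    shift is a hypothetical (dr, dg, db) correction; the weight falls linearly
    from 1.0 for black to 0.0 for white, a crude stand-in for a "shadows" range.
    """
    weight = 1.0 - luminance(pixel)
    return tuple(min(max(c + weight * d, 0.0), 1.0) for c, d in zip(pixel, shift))


remove_yellow = (-0.03, -0.02, 0.05)  # less red/green, more blue
dark = (0.20, 0.18, 0.10)
light = (0.92, 0.92, 0.90)
print(correct_shadows(dark, remove_yellow))   # shifted noticeably
print(correct_shadows(light, remove_yellow))  # barely changed
```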

However, other times localized color casts are caused by stains — and not some gradual process affecting the entire image. In that case, one might be better off just blending or removing these regions entirely, as discussed in the next section. . .

3. LOCALIZED DAMAGE: CLONING & HEALING BRUSH

Old photos have likely incurred plenty of imperfections in the form of dust marks, rips or tears, stains, scratches and crinkles. These can distract from the image — particularly if located on or near someone's face:

Figure panels: Original Scan | After Cloning & Healing Brush Tools

Conservative restoration using only cloning and healing brush tools; some imperfections still remain.

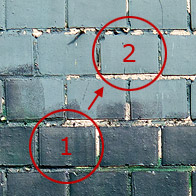

By far the best tools for removing these are the clone stamp and healing brush tools (in Adobe Photoshop and most other editing software). These are in fact the only two tools used to restore the above image. Each tool has the following effect:

Figure panels: Clone Stamp | Healing Brush (1 = Source Region, 2 = Target Region)

- Clone Stamp Tool. This works by replacing the image content in a target region with a copy from a source region. This is most useful for situations where you need to fully reconstruct all characteristics of a source region, such as when removing a large tear or duplicating a repeating object or pattern.

- Healing Brush Tool. This also works by replacing the content in a target region with that from a source region, except it only replaces texture — while preserving the original color and luminosity. Even though less is being replaced, this often actually makes the healing brush a much more effective tool. This is most useful when removing small or isolated imperfections, and when touching up uneven transitions caused by the clone stamp.
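The difference between the two tools can be illustrated with one-dimensional tone samples. Cloning copies the source outright, while the simplified "healing" below keeps the source's texture but shifts it to the average tone of the undamaged pixels around the target. Real healing tools blend far more carefully than a single average offset, so treat this as a conceptual sketch with hypothetical function names:

```python
from statistics import mean


def clone_stamp(source: list[float]) -> list[float]:
    """Clone stamp: the target region becomes an exact copy of the source region."""
    return list(source)


def simple_heal(source: list[float], target_surroundings: list[float]) -> list[float]:
    """Very simplified healing: keep the source's texture (its variation around
    its own average) but shift it to the average tone of the undamaged pixels
    surrounding the target.
    """
    offset = mean(target_surroundings) - mean(source)
    return [min(max(v + offset, 0.0), 1.0) for v in source]


# Hypothetical tone samples: a textured, brighter source area and the darker
# undamaged pixels around a scratch that needs covering
source = [0.62, 0.58, 0.60, 0.64, 0.56]
around_scratch = [0.31, 0.29, 0.30, 0.30]
print(clone_stamp(source))                                         # still ~0.60: a bright patch
print([round(v, 2) for v in simple_heal(source, around_scratch)])  # texture kept, ~0.30 average
```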

Each tool may require extensive practice before it can be used to maximal effect. Both require clicking at least twice: once (while holding the ALT/option key) to select the source, then again for the target. General tips and best practices include:

- Tool Order. Optimal results are usually achieved by using each tool in succession. One can start by replacing all missing content with the clone stamp tool, then do a final touch-up with the healing brush.

- Source Region. Perhaps the most important aspect is learning to choose the best source region for a given target; ideally the brush size should be no larger than required by the target imperfection, and the source should be as close as possible to the target's lighting and location within the image.

- Brush Hardness. A lower brush hardness setting causes the edges of the brush to blend in better with the image — especially with the clone stamp — but this also causes blurred or washed-out detail if the source and target textures are different.

- Repetition. Take extra care not to give the appearance of repeating features amongst otherwise random texture; this is a tell-tale sign that the cloning and healing brushes have been used, and is surprisingly easy to identify — even by those not familiar with photo editing. Using a diverse range of brush sizes can help make such repeating features less noticeable.

The image below shows the combined effect of these tools on a large print that was digitally reassembled from torn pieces:

Restoration required extensive cloning since tears were across detailed brick textures. Photo circa 1929.

FINAL WORDS

Photo restorations require many interpretive decisions, so careful judgment is a critical aspect of the restoration process. Before you even touch the photo, ask yourself: what do you hope to accomplish, and which aspects are of highest priority to improve? Do your restorations maintain the original mood of the photo, or is that even important? All of these can influence your choice among several seemingly equal approaches to restoration.

Regardless, take your time! Proper removal of physical imperfections such as stains or scratches can be quite involved, so do not (always) expect miracles with just a few minutes of work.

For similar topics, also see the following tutorials:

- Levels Tool. A relatively simple and easy to use way of controlling image tones.

- Curves Tool. A more powerful but more complicated tonal adjustment tool.

- Digital Photo Editing Workflow. A comprehensive overview of all the typical steps.

DIGITAL PHOTO EDITING WORKFLOW

This article summarizes the most important steps to incorporate into your digital photo editing workflow (aka "post-processing workflow"). It isn't necessarily a procedure that you'll want to implement with all of your photos, but whenever you want to get that "keeper" looking just right, these steps can make all the difference.

Figure panels: Before | After

The "after" image more accurately depicts how the scene appeared in person — a good goal for most photo editing. Only white balance, exposure compensation, shadow recovery and sharpening were applied to produce the "after" image above — all steps described in the workflow below.

OVERVIEW: TOP 10 POST-PROCESSING STEPS

Steps are listed roughly in the order in which they should be applied:

- White Balance - temperature and tint adjustment sliders

- Exposure - exposure compensation, highlight/shadow recovery

- Noise Reduction - during RAW development or using external software

- Lens Corrections - distortion, vignetting, chromatic aberrations

- Detail - capture sharpening and local contrast enhancement

- Contrast - black point, levels and curves tools

- Framing - straighten and crop

- Refinements - color adjustments and selective enhancements

- Resizing - enlarge for a print or downsize for the web or email

- Output Sharpening - customized for your subject matter and print/screen size

Note: The sections below give a quick summary of the most important considerations for each step, along with references to more in-depth tutorials on each topic.

The above steps are virtually universal, so most photo editing software should work. If you've captured your images using the RAW file format (highly recommended), then the order of the above steps isn't as important, since they'll be intelligently applied when you develop using your RAW software. Otherwise it's critical that you follow the above sequence, especially with steps involving sharpening, resizing and noise reduction. Be careful, though: extreme edits can easily cause image posterization with JPEG files.

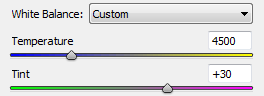

1. WHITE BALANCE

Simply getting the white balance right can often make the single biggest improvement in your photo's colors. An incorrect white balance will give your image a color cast, and can dramatically reduce both contrast and color saturation:

- Controls. First adjust the "temperature" slider, then refine using the "tint" slider. The former controls the relative warmth of the image, whereas the latter controls the magenta-green shift.

- Problem Scenes. Paradoxically, cameras typically make the biggest white balance mistakes with scenes that are the most dramatically lit (and often could have benefited the most). Pay extra attention to white balance when shooting sunsets, indoor lighting and low-light photos, etc.

- Other Tools. If there's an object somewhere in the image which ought to be neutral gray, you can also use a "white point dropper" (or similarly named tool) to automatically set the white balance so that this object's color cast is subtracted from the entire image.

2. EXPOSURE: COMPENSATION & RECOVERY

This step presumes that you've done everything possible to get an accurate exposure at the time of capture. However, this isn't always possible (or practical).

Figure panels: Under-Exposure (clipped shadows in blue) | Ideal Exposure | Over-Exposure (clipped highlights in red)

Fortunately, exposure can be fine-tuned by using the "exposure compensation" adjustment tool. Some general tips include:

- Image Histogram. Use this as an objective guide. Refer to the following tutorial: Image Histograms: Tones & Contrast.

- Viewing. View the photo at a small size on-screen to make it easier to judge exposure. Also keep in mind that exposure doesn't necessarily have a "right" answer; it often depends on your artistic intent and on what simply "looks right." Refer to the tutorial on digital exposure techniques for more on this topic.

- Extreme Tones. Pay careful attention to whether there are any blown highlights or lost shadow detail. If your software supports it, you might be able to recover these by using the "fill light," "recovery" or "black point" tools.

- Limitations. Avoid excessive compensation; if you increase exposure too much, noise will become visibly worse in the shadows, whereas decreasing it too much will make blown highlights more apparent. In either case, this probably means that your original exposure was way off, and you're likely better off working on a different photo.
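On linear RAW data, exposure compensation amounts to multiplying every tone by 2 raised to the number of EV. The sketch below, assuming linear tones normalized to 0.0-1.0 and hypothetical sample values, shows why pushing compensation too far clips highlights while leaving lost shadow detail unrecoverable. Real RAW converters add tone curves and highlight recovery on top of this, and the helper names are illustrative:

```python
def compensate_exposure(linear_tones: list[float], ev: float) -> list[float]:
    """Apply exposure compensation to linear tones: +1 EV doubles every value.

    Values are clipped at 1.0 (blown highlights). Pure black (0.0) stays black,
    since no amount of gain recovers detail that was never recorded.
    """
    gain = 2.0 ** ev
    return [min(t * gain, 1.0) for t in linear_tones]


def clipped_fractions(tones: list[float]) -> tuple[float, float]:
    """Share of tones that are pure black (lost shadows) and pure white (blown highlights)."""
    n = len(tones)
    return sum(t <= 0.0 for t in tones) / n, sum(t >= 1.0 for t in tones) / n


under = [0.0, 0.05, 0.10, 0.20, 0.35, 0.45]  # hypothetical under-exposed linear tones
for ev in (0, 1, 2):
    print(f"{ev:+d} EV:", clipped_fractions(compensate_exposure(under, ev)))
```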

3. NOISE REDUCTION

If your image was taken at a high ISO speed, it will likely benefit from noise reduction:

Figure panels: High Image Noise Original (taken at high ISO) | Photo on Left After Noise Reduction | Best Case Scenario (taken at low ISO)

- Order. Noise reduction is most effective when applied before any other image editing (with the exception of steps 1 & 2 above: white balance and exposure compensation).

- Types. Image noise has many types; some are easily removed while others aren't. Fortunately, noise from a high ISO speed is the type that is most easily addressed. Refer to the tutorial on image noise for a background on this topic.

- Limitations. Aim for noise reduction as opposed to complete removal, since the latter can make subjects appear unnaturally smooth. Some noise is OK and even expected.

- Dedicated Software. For problematic images, it is worth experimenting with dedicated noise reduction software such as Neat Image, Noise Ninja, Grain Surgery, or others.

- Sharpening. Noise reduction often goes hand in hand with sharpening, so this step may need to be applied in conjunction with step 5 (depending on your software). This is because the two can offset each other: sharpening increases noise, while noise reduction often decreases sharpness.

- In special cases, another technique is described in the tutorial on Noise Reduction by Image Averaging.
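Image averaging works because random noise differs from frame to frame while the scene does not, so the noise standard deviation falls roughly with the square root of the number of frames averaged. The sketch below simulates this with a hypothetical flat gray frame plus Gaussian noise, assuming a static scene, perfectly aligned frames and noise that is independent between frames; real sensor noise only approximately behaves this way.

```python
import random
import statistics


def simulate_noisy_frame(true_value, noise_sigma, n_pixels, rng):
    """Hypothetical flat gray frame with independent Gaussian noise per pixel."""
    return [rng.gauss(true_value, noise_sigma) for _ in range(n_pixels)]


def average_frames(frames):
    """Average several aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


rng = random.Random(42)  # fixed seed so the run is repeatable
frames = [simulate_noisy_frame(0.5, 0.1, 10_000, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
averaged_noise = statistics.pstdev(average_frames(frames))
print(f"single frame noise: {single_noise:.4f}")
print(f"16-frame average:   {averaged_noise:.4f} (expected about {0.1 / 16 ** 0.5:.4f})")
```

The signal (0.5) is unchanged by averaging; only the frame-to-frame randomness shrinks, which is why this approach avoids the "unnaturally smooth" look that aggressive single-image noise reduction can cause.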

4. LENS CORRECTIONS

The three most problematic (but correctable) lens imperfections include:

- Vignetting is most prevalent when you're using low f-stops, although some lenses are also more susceptible to this than others. A little vignetting is often beneficial, since this can draw attention to the image's center and make the frame's edges less abrupt. Also be aware that correcting for vignetting increases image noise near the corners. However, if your vignetting is actually due to physical blockage (such as a lens hood or filter), then this unfortunately cannot be corrected.

- Distortion will be most prevalent when you're using wide angle or telephoto lenses (or are using a zoom lens at either extreme). Don't try to fix this unless it's clearly visible, since doing so can slightly reduce corner resolution and alter your composition. Distortion is often acceptable with landscapes, but not in architectural photos.

- Chromatic Aberration will be most apparent at low f-stops, near the corners of your image and in regions with high contrast detail. When correcting for CA, use a high contrast edge near the image's extreme corner as a guide.

However, be aware that not all types of CA can be easily removed. If standard tools don't appear to help, you might also want to try other settings. For example, Lightroom and Adobe Camera RAW have a "fringing" tool which can reduce the more persistent types of CA (though potentially at the cost of reduced detail).

For more, also see the tutorial on Improving Image Quality with Lens Corrections.
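Software vignetting correction amounts to multiplying each pixel by a gain that grows with distance from the image center. The sketch below uses a hypothetical polynomial falloff model on linear pixel values; the coefficients `k1` and `k2` are made-up illustrative numbers, not values from any lens profile. Because the same gain also multiplies the noise, the gain expressed in stops is roughly how far the corners are being "pushed," which is why correction increases corner noise. Physical vignetting from a hood or filter can leave corners with no usable image data, which no gain can restore.

```python
import math


def vignetting_falloff(r, k1=-0.3, k2=0.0):
    """Hypothetical radial model: relative brightness at normalized radius r.

    r = 0 is the image center and r = 1 is the extreme corner.
    """
    return 1.0 + k1 * r**2 + k2 * r**4


def correction_gain(r, **model):
    """Gain that restores a pixel at radius r to the center's brightness."""
    return 1.0 / vignetting_falloff(r, **model)


def normalized_radius(x, y, width, height):
    """Distance of pixel (x, y) from the center, scaled so corners are at 1."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    return math.hypot(x - cx, y - cy) / math.hypot(cx, cy)


def correct_vignetting(image, **model):
    """Apply the radial gain to a 2D list of linear pixel values."""
    height, width = len(image), len(image[0])
    return [
        [image[y][x] * correction_gain(normalized_radius(x, y, width, height), **model)
         for x in range(width)]
        for y in range(height)
    ]


for r in (0.0, 0.5, 1.0):
    gain = correction_gain(r)
    print(f"r={r:.1f}: gain {gain:.2f} ({math.log2(gain):.2f} stops of push)")
```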

5. DETAIL: SHARPENING, CLARITY & LOCAL CONTRAST

The aim of this step is to offset any inherent softening caused by your camera's sensor and lens ("capture sharpening"). It's also important that it be applied conservatively since you'll also be applying "output sharpening" in a later step. Sharpening should be performed with care because it can exacerbate other image quality issues (such as noise, chromatic aberrations, etc.). However, when done right, it can make a tremendous difference in the perceived quality of your photo.

For a background and specific techniques, refer to these tutorials:

- Guide to Image Sharpening (practical overview)

- Sharpening Using an Unsharp Mask (background on how the USM tool works)

- Understanding Sharpness (background on what factors contribute to sharpness)

- Using Local Contrast Enhancement (a technique for improving clarity)
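The unsharp mask works by blurring a copy of the image, subtracting it from the original to isolate fine detail, and adding a scaled portion of that detail back. The one-dimensional sketch below applies this to a single row of pixels. Treating "radius" as the Gaussian standard deviation in pixels, and the exact `threshold` behavior, are assumptions here, since editors define these controls differently. Running it on a soft edge shows the overshoot on either side of the edge, which is what becomes visible as halos when sharpening is too strong.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel spanning about +/- 3 sigma (sigma > 0)."""
    half = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, sigma):
    """Gaussian-blur a row of pixel values, clamping at the edges."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    n = len(row)
    return [
        sum(k * row[min(max(i + j - half, 0), n - 1)] for j, k in enumerate(kernel))
        for i in range(n)
    ]


def unsharp_mask(row, radius=1.0, amount=0.5, threshold=0.0):
    """Add back `amount` times (original - blurred).

    Differences smaller than `threshold` are left alone, which helps avoid
    sharpening noise in smooth areas.
    """
    blurred = blur_row(row, radius)
    out = []
    for original, soft in zip(row, blurred):
        detail = original - soft
        out.append(original + amount * detail if abs(detail) >= threshold else original)
    return out


# A soft dark-to-light edge, like one rendered by a slightly blurry lens.
edge = [0.2, 0.2, 0.2, 0.3, 0.5, 0.7, 0.8, 0.8, 0.8]
print([round(v, 3) for v in unsharp_mask(edge, radius=1.0, amount=1.0)])
```

Values dipping below 0.2 and rising above 0.8 next to the edge are the halo; lowering `amount` or `radius` shrinks it.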

6. CONTRAST: LEVELS & CURVES TOOLS

Images taken into the sun or near a bright indoor light source often suffer from low contrast, since these are leading causes of lens flare. Improving contrast often gives the long-sought-after "pop" or 3D look to your images, but keep in mind:

- Too much contrast can make your subject look unrealistic if low contrast was actually a trait of your scene (such as photos taken in fog or haze).

- Higher contrast can also make colors appear more saturated.

Also refer to the following tutorials:

- Using the Levels Tool (in Photoshop & Other Image Editing Software)

- Using the Curves Tool (in Photoshop & Other Image Editing Software)
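The core operation of the Levels tool can be sketched as a remap: the chosen black and white points are stretched to the ends of the tonal range, and a gamma exponent adjusts the midtones. The Python below is a simplified model assuming values normalized to 0.0-1.0; `apply_levels` is a hypothetical helper, and actual software may also offer output levels or operate per color channel.

```python
def apply_levels(value, black=0.0, white=1.0, gamma=1.0):
    """Remap one pixel value (0.0-1.0) the way a basic Levels dialog does.

    Inputs at or below `black` become 0, inputs at or above `white` become 1,
    and `gamma` > 1 brightens the midtones (< 1 darkens them).
    """
    if white <= black:
        raise ValueError("white point must be above black point")
    normalized = (value - black) / (white - black)
    normalized = min(max(normalized, 0.0), 1.0)
    return normalized ** (1.0 / gamma)


# A low-contrast (hazy or flare-affected) set of tones occupying only 0.2-0.8.
tones = [0.2, 0.35, 0.5, 0.65, 0.8]
print([round(apply_levels(t, black=0.2, white=0.8), 3) for t in tones])
# -> [0.0, 0.25, 0.5, 0.75, 1.0]
```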

7. FRAMING: ROTATE & CROP

The vast majority of snapshots can be dramatically enhanced simply by cropping them to strengthen their composition. While there are no universal rules, some good principles are outlined in the tutorial on composition: the rule of thirds.

You may also want to crop your image so that it exactly matches a given print size (such as 8x10 inches). The aspect ratio of your crop can often be specified within your photo editing software — making this process much easier.
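Matching a print size like 8x10 inches comes down to finding the largest crop with that aspect ratio. The hypothetical helper below computes a centered crop in whole pixels; in practice you would usually shift the crop window to suit the composition rather than always centering it.

```python
from fractions import Fraction


def largest_centered_crop(width, height, aspect_w, aspect_h):
    """Return (left, top, crop_width, crop_height) for the largest centered
    crop with an aspect ratio of aspect_w:aspect_h."""
    target = Fraction(aspect_w, aspect_h)
    if Fraction(width, height) > target:
        # Image is too wide for the target: keep full height, trim the sides.
        crop_h = height
        crop_w = int(height * target)
    else:
        # Image is too tall (or already exact): keep full width, trim top/bottom.
        crop_w = width
        crop_h = int(width / target)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


# A 6000x4000 (3:2) landscape image cropped for a 10x8 inch print.
print(largest_centered_crop(6000, 4000, 10, 8))
```

Using `Fraction` keeps the ratio exact, so the result is not affected by floating-point rounding before the final truncation to whole pixels.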

8. REFINEMENTS - COLORS & SELECTIVE ENHANCEMENTS

This is really a catch-all category, but typical adjustments might include:

- Colors - saturation, vibrance and other color adjustments. However, these are often overdone, and frequently aren't necessary if white balance, exposure and contrast have all been properly set.

- Selective Enhancements - spot removal of dust/blemishes (as shown above), creative sharpening (such as at the eyes of someone in a portrait) and selective noise reduction (in otherwise smooth areas such as the sky or skin). Important tools include: the healing brush, clone tool, layer masks and adjustment brushes. Consult your software's help file or manual to browse what options are available.

Once these have been applied, make sure to save a copy of your image, since all subsequent editing steps depend only on how (and whether) you intend to share this photo. This way you don't have to redo everything each time you plan on using your image for something else. At this stage, the image should appear finished when viewed on your screen.

9. RESIZING: UPSIZE FOR PRINT, DOWNSIZE FOR WEB

Whenever you alter the display size of your image, you need to resize the file (using a process called "digital image interpolation"). The resize strategy can be quite different, depending on whether you want to make it larger or smaller.

When enlarging your image for a print:

- Always try to perform enlargements yourself instead of having this done by the printer (otherwise it's easy to accidentally create prints which appear digital/pixelated).

- If you see haloes around sharp edges, then your earlier capture sharpening may have been applied too aggressively (or with too high a radius value).

- Also refer to this tutorial: Optimizing Digital Photo Enlargement
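Whether a print needs enlarging depends on the pixel dimensions it requires at your chosen resolution. The sketch below assumes 300 pixels per inch, a common target for prints viewed up close but not a universal requirement; both helpers are hypothetical.

```python
import math


def pixels_needed(print_w_in, print_h_in, ppi=300):
    """Pixel dimensions a print requires at the given pixels per inch."""
    return math.ceil(print_w_in * ppi), math.ceil(print_h_in * ppi)


def enlargement_factor(image_w, image_h, print_w_in, print_h_in, ppi=300):
    """How much the image must be upsized to reach that resolution.

    A result of 1.0 or less means no enlargement is needed.
    """
    need_w, need_h = pixels_needed(print_w_in, print_h_in, ppi)
    return max(need_w / image_w, need_h / image_h)


print(pixels_needed(8, 10))                   # (2400, 3000)
print(enlargement_factor(2000, 2500, 8, 10))  # 1.2
```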

When downsizing your image for the web or email display:

- Avoid introducing non-image patterns such as the moiré artifacts shown below.

- Also refer to this tutorial: Image Resizing for the Web and Email

| Original Image | Downsized 50% (unrealistic moiré artifacts) |
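Moiré is a form of aliasing: detail finer than the new, coarser pixel spacing gets misrepresented as a false pattern or tone when pixels are simply discarded. A common remedy is to low-pass filter the image before (or while) discarding pixels. The one-row sketch below uses a simple box average as that filter, which is much cruder than the resampling filters in typical editing software, but it shows the difference; a real image would be filtered along both rows and columns.

```python
def downsize_naive(row, factor):
    """Keep every `factor`-th pixel and throw the rest away (no filtering)."""
    return row[::factor]


def downsize_box(row, factor):
    """Average each block of `factor` pixels: a simple anti-aliasing filter."""
    usable = len(row) - len(row) % factor
    return [sum(row[i:i + factor]) / factor for i in range(0, usable, factor)]


# Fine detail at the pixel level: alternating dark and light one-pixel stripes.
stripes = [0.0, 1.0] * 8

print(downsize_naive(stripes, 2))  # every kept pixel is 0.0: the stripes turn solid black
print(downsize_box(stripes, 2))    # every value is 0.5: the stripes' true average gray
```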

Either way, what's often even more important than how you resize is that you make sure to follow this up with output sharpening (in the next step).

10. OUTPUT SHARPENING

Output sharpening is generally the very last image editing step applied to an image. Its settings are therefore customized for a particular output device, which may include special considerations based on the size, type and viewing distance of a print. Output sharpening can also offset any softening caused by resizing an image for the web or e-mail.

Refer to the guide to image sharpening for more on this topic.

OTHER RECOMMENDATIONS

- Backups. Once you're done, it's a good idea to make sure that you're properly archiving your digital photos using backup files. It would be a shame to lose all of this hard work spent in photo editing (and in the original photo capture).

- Monitor Calibration. The time spent photo editing is only beneficial if what you see on your screen is accurate. This is absolutely critical. If you haven't done so already, see the tutorial on monitor calibration for digital photography for more on this topic.

NEUTRAL DENSITY FILTERS

Neutral density (ND) filters reduce the amount of light entering the camera, enabling a longer exposure time than otherwise possible. This can emphasize motion, or make an otherwise tumultuous scene appear surreal and quiescent. Alternatively, an ND filter also enables larger apertures, which can produce a shallower depth of field, or achieve a sharper photo. Either way, this is a useful and often under-appreciated filter that deserves a deeper look. ND filters are also one of the easiest filters to use, and their effect cannot be replicated digitally — at least not with a single shot.

| Exposure time: 1/8 second at f/10 and ISO100 | Exposure time: 30 seconds at f/10 and ISO100 |

Examples courtesy of kyle kruchok.

OVERVIEW

An ND filter is nothing more than a semi-transparent piece of glass that gets placed in front of your lens. What makes it special, however, is that it obstructs a precisely controlled fraction of incoming light, and does so uniformly — thereby not altering image contrast or sharpness. The obstruction also aims to be equal across the visible spectrum, thereby not introducing a color cast (although this isn't always the case). This last characteristic also happens to be why it's called a neutral density filter.

Example of a screw-on ND filter.

Even though they might appear gray or even opaque to our eyes, this isn't how your photo will appear; the camera's metering automatically compensates by letting in more light. However, the viewfinder will still appear very dark, so photographers often compose their image prior to placing the filter in front of the lens.

ND filters are specified by their light-reducing ability, where stronger filters appear as darker shades of gray. Some common specifications are summarized below:

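Filter terminology: the "Light Reduction" and "Density" columns are two alternative ways of labeling the same filter strength.

| Filter Strength (in f-stops) | Light Reduction | Density | Common Uses |
|---|---|---|---|
| 2 | 4X | 0.6 ND | Modest increases in exposure time, such as with waterfalls |
| 3 | 8X | 0.9 ND | Modest increases in exposure time, such as with waterfalls |
| 10 | ~1,000X | 3.0 ND | Extreme increases in exposure time, such as blurring in broad daylight |
| 13 | ~10,000X | 4.0 ND | Extreme increases in exposure time, such as blurring in broad daylight |
| 20 | ~1,000,000X | 6.0 ND | Extreme increases in exposure time, such as blurring in broad daylight |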

Note: the light reduction factors above can also be thought of as exposure time multipliers.

Many other intermediate strengths exist, but high precision typically isn't needed with ND filters. One can often instead adjust the aperture, ISO or shutter speed by one stop without substantially changing the image.

Technical Note: Recall that each "stop" of light reduction corresponds to a halving of light. A filter with a strength of $s$ stops therefore passes only $1/2^{s}$ of the initial incoming light. For example, a 3-stop ND filter passes only 1/8th of the incoming light, since $1/2^{3} = 1/(2 \times 2 \times 2) = 1/8$.
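These relationships are easy to check numerically: the light reduction for a strength of s stops is 2**s, and the density rating is the base-10 logarithm of that factor, about 0.3 per stop. This also explains the "~" marks in the table above: 10 stops is exactly 1,024X (a density of about 3.01), and 13 stops is 8,192X (about 3.91), which are rounded to 1,000X/3.0 and 10,000X/4.0. The helper names below are hypothetical.

```python
import math


def light_reduction(stops):
    """Exposure-time multiplier for a filter of the given strength: 2**stops."""
    return 2.0 ** stops


def optical_density(stops):
    """Density rating: log10 of the light reduction, about 0.3 per stop."""
    return stops * math.log10(2.0)


def stops_from_density(density):
    """Inverse conversion, e.g. a "3.0 ND" rating back to stops."""
    return density / math.log10(2.0)


for stops in (2, 3, 10, 13, 20):
    print(f"{stops:>2} stops -> {light_reduction(stops):>9,.0f}X, "
          f"density {optical_density(stops):.2f}")
```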

SHARPER & LONGER EXPOSURES

Neutral density filters can be used to create any combination of (i) a longer exposure time, (ii) a shallower depth of field and (iii) a sharper photograph. Of these three, the first is by far the most common application, so that's where we'll start.

| 2 seconds | 1/10 second |

Columbia River Gorge, Oregon

Longer exposure times can achieve a wide variety of artistic effects, including softening the appearance of turbulent water, blurring waves of blowing grass, or emphasizing motion within a crowd of people. For a full discussion of these and other examples, see the tutorial on using shutter speed creatively.

However, let's focus on a specific waterfall example. Without a filter, one would use both the smallest aperture and the lowest ISO speed available to maximize the exposure time. With a waterfall under soft daylight, f/22 and ISO100 might yield an exposure of 1/10 second. Unfortunately, not only is this duration insufficient, but the f-stop also has to be increased to the point where diffraction reduces sharpness.

Using an ND filter allows you to address both of these problems, but the extent to which either is improved depends on how you choose to allocate its effect. With a 5-stop ND filter, the same settings would yield a 32X longer exposure time — giving the water a much silkier appearance. Alternatively, one might feel that a 16X (4-stop) exposure time increase is sufficient when weighed against the potential for having a sharper photo, and could instead also decrease the f-stop to f/16.
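This trade-off can be treated as a stop budget: the filter's stops are split between a longer shutter time and a wider aperture. The sketch below is a simple calculator under that assumption (fixed ISO and scene brightness), with hypothetical helper names. Note that marked f-numbers are rounded, so f/22 to f/16 computes to about 0.92 stop rather than exactly one, giving roughly 1.7 seconds instead of the nominal 16X (1.6 seconds).

```python
import math


def aperture_stops(f_from, f_to):
    """Stops of light lost when changing from f_from to f_to.

    Positive means stopping down (less light); negative means opening up.
    """
    return 2.0 * math.log2(f_to / f_from)


def shutter_with_nd(base_time, nd_stops, aperture_change_stops=0.0):
    """Shutter time that keeps exposure constant after adding an ND filter.

    Stopping down lengthens the time further; opening up (negative stops)
    spends part of the filter's strength on aperture instead of time.
    """
    return base_time * 2.0 ** (nd_stops + aperture_change_stops)


base_time = 1 / 10  # f/22 and ISO100 without a filter
all_to_time = shutter_with_nd(base_time, 5)
split = shutter_with_nd(base_time, 5, aperture_stops(22, 16))
print(f"5 stops to time: {all_to_time:.1f} s")
print(f"f/22 -> f/16:    {split:.2f} s")
```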

How long an exposure does one typically need? For full effect, multi-second exposures are usually a must. These can render clouds as streaks in the sky, blur moving people beyond recognition, or make waves appear as a uniform, low-lying mist. However, this depends on the nature of the motion, the amount of subject magnification and the desired effect. The key is lots of experimentation.

Exposure time: 60 seconds. Photo courtesy of colin southern.

During daylight, achieving this effect usually requires having a 10-stop or greater ND filter strength, which blocks all but a staggering 1/1000th of incoming light. Try revisiting the exposure settings from some of your past images to see what ND filter strengths would've been needed to achieve multi-second exposures. For example, if a given series of landscapes used a 1/50 second shutter speed at optimal aperture and ISO speed, then these would've required a 10-stop ND filter to extend this exposure time to 20 seconds.
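Revisiting past exposures this way is a single logarithm: the stops needed equal log base 2 of the ratio between the target and current shutter times. For the 1/50 second example the ratio is 1,000, just under 10 stops (2^10 = 1,024). The filter kit below is hypothetical, using the strengths from the table above, and the small `tolerance` is an assumption that lets a filter within about 1/20 stop count as sufficient.

```python
import math


def required_nd_stops(current_time, target_time):
    """Stops of ND needed to stretch current_time into target_time."""
    return math.log2(target_time / current_time)


def weakest_sufficient_filter(stops_needed, kit=(2, 3, 10, 13, 20), tolerance=0.05):
    """Weakest filter in a hypothetical kit that is (nearly) strong enough."""
    for stops in sorted(kit):
        if stops + tolerance >= stops_needed:
            return stops
    return None  # no single filter in the kit is strong enough


needed = required_nd_stops(1 / 50, 20)
chosen = weakest_sufficient_filter(needed)
print(f"needed {needed:.2f} stops -> use a {chosen}-stop filter "
      f"({1 / 50 * 2 ** chosen:.1f} s)")
```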

SHALLOWER DEPTH OF FIELD

Although ND filters are primarily used to achieve longer exposures, a less common application is to enable a shallower depth of field in very bright light. For example, most SLR cameras have a maximum shutter speed of 1/4000 second, so a subject in direct sunlight might therefore require an f-stop greater than about f/4.0 (at ISO100). With a 2-stop ND filter, one could reduce this to f/2.0 — yielding a dramatic improvement in background blur and subject isolation.

Photo courtesy of alex campos. Note: above example doesn't use an ND filter, but does illustrate a situation where one could potentially help (depending on the camera's max shutter speed).

On the other hand, these situations are rare, and usually benefit more from photographing the subject under dimmer (and likely less harsh) lighting. These situations are also unlikely to require anything stronger than a 2- or 3-stop ND filter.
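Each stop of ND lets the aperture open by one stop. Since the light passed scales with aperture area (proportional to 1/N^2 for f-number N), one stop divides the f-number by the square root of 2, and two stops halve it. This sketch assumes shutter speed and ISO stay fixed, and uses a hypothetical helper name; for the example above it turns f/4.0 into f/2.0 with a 2-stop filter.

```python
def widen_aperture(f_number, nd_stops):
    """f-number giving the same exposure after adding nd_stops of ND filter.

    Each stop divides the f-number by sqrt(2), so two stops halve it.
    """
    return f_number / 2.0 ** (nd_stops / 2.0)


for nd in (2, 3):
    print(f"f/4.0 with a {nd}-stop ND -> f/{widen_aperture(4.0, nd):.1f}")
```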

DIGITAL NEUTRAL DENSITY FILTERS

Although ND filters cannot be replicated digitally, certain scenes and exposure times can be adequately emulated by employing a technique called image averaging. This works by combining several separate photos in a way that simulates a single, longer exposure.
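The relationship between averaging and ND strength can be sketched as follows, assuming N consecutive frames taken at the same settings: the average keeps the brightness of a single frame but spans N times its exposure time, much like a log2(N)-stop ND filter. Any gap between frames leaves motion unrecorded, so moving subjects may show as separate ghosted copies rather than a smooth blur; the `gap` parameter and both helper names are hypothetical.

```python
import math


def averaged_capture(shutter_time, n_frames, gap=0.0):
    """Return (recorded_time, elapsed_time) for averaging n_frames shots.

    recorded_time is the motion actually captured; elapsed_time also
    includes any gaps between frames, during which motion is missed.
    """
    recorded = n_frames * shutter_time
    elapsed = recorded + (n_frames - 1) * gap
    return recorded, elapsed


def equivalent_nd_stops(n_frames):
    """Averaging N frames spans N times the exposure, like a log2(N)-stop ND."""
    return math.log2(n_frames)


for n in (2, 4, 16):
    recorded, elapsed = averaged_capture(1 / 10, n, gap=0.05)
    print(f"{n:>2} frames: ~{equivalent_nd_stops(n):.0f}-stop ND equivalent, "
          f"{recorded:.1f} s recorded over {elapsed:.2f} s")
```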
